Criteria-based Content Analysis in True and Simulated Victims with Intellectual Disability

[Criteria-based content analysis in real and simulated victims with intellectual disability]

Antonio L. Manzanero (a), M. Teresa Scott (b), Rocío Vallet (a), Javier Aróztegui (a), and Ray Bull (c)

(a) Universidad Complutense de Madrid, Spain; (b) Universidad del Desarrollo, Chile; (c) Derby University, United Kingdom

https://doi.org/10.5093/apj2019a1

Received 28 August 2018, Accepted 26 November 2018

Abstract

The aims of the present study were to analyse people’s natural ability to discriminate between true and false statements provided by people with intellectual disability (IQ(true) = 62.00, SD = 10.07; IQ(false) = 58.41, SD = 8.42), and to identify the differentiating characteristics of such people’s statements using criteria-based content analysis (CBCA). Thirty-three people assessed 16 true statements and 13 false statements using only their intuition. Two other evaluators trained in CBCA evaluated the same statements. The lay evaluators differentiated between true and false statements with somewhat above-chance accuracy, even though the error rate was high (38.19%). That lay participants could not effectively discriminate between false and true statements demonstrates that such intuitive assessments cannot be considered useful in a forensic context. The CBCA technique did discriminate at a better level than intuitive judgements. However, of the 19 criteria, only one discriminated significantly. More procedures specifically adapted to the abilities of people with intellectual disabilities are thus needed. The presence of structured production, quantity of details, characteristic details, and unexpected complications increased the probability that a statement would be considered true by non-expert evaluators. The classification made by the non-expert evaluators was independent of the participants’ IQ. A big data analysis was performed in search of better classification quality.

Summary

The aims of this study were to examine the natural ability to discriminate between true and false statements made by people with intellectual disability (IQ(true) = 62.00, SD = 10.07; IQ(false) = 58.41, SD = 8.42), and to identify the differentiating characteristics of such statements using the content analysis criteria of the CBCA technique. Thirty-three people rated 16 true and 13 false statements using their intuition. Two other evaluators trained in CBCA rated the same statements. The non-expert evaluators differentiated between true and false statements with above-chance accuracy, although the error rate was high (38.19%). The fact that the untrained evaluators could not effectively discriminate between false and true statements shows that intuition cannot be considered useful in a forensic context. The CBCA technique discriminated better than intuitive judgements. However, only one of the 19 criteria discriminated significantly, so more procedures specifically adapted to the abilities of witnesses with intellectual disability are needed. The presence of structured production, quantity of details, specific characteristics of the aggression, and unexpected complications increased the probability that a statement would be considered true by the non-expert evaluators. The classification made by the untrained evaluators was independent of the participants’ IQ. A big data analysis is carried out to improve classification quality.

Keywords

Credibility analysis, Intuitive judgements, Intellectual disability, Content criteria, Big data.

Keywords

Credibility assessment, Intuitive judgments, Intellectual disability, CBCA content criteria, Big data.

Cite this article as: Manzanero, A. L., Scott, M. T., Vallet, R., Aróztegui, J., & Bull, R. (2019). Criteria-based content analysis in true and simulated victims with intellectual disability. Anuario de Psicología Jurídica, 29, 55-60. https://doi.org/10.5093/apj2019a1

Correspondence: antonio.manzanero@psi.ucm.es (A. L. Manzanero).

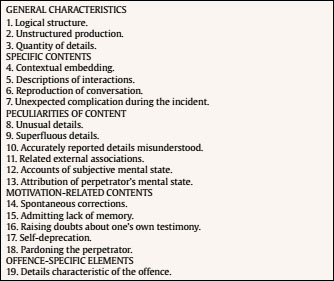

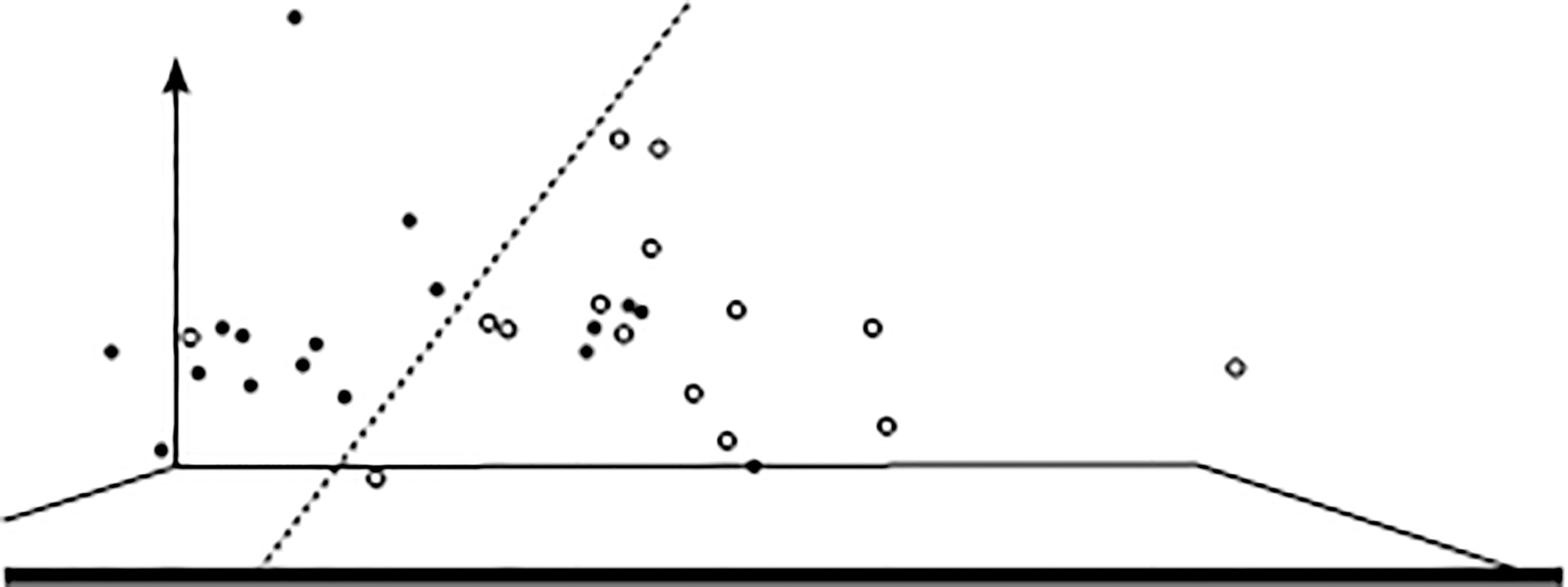

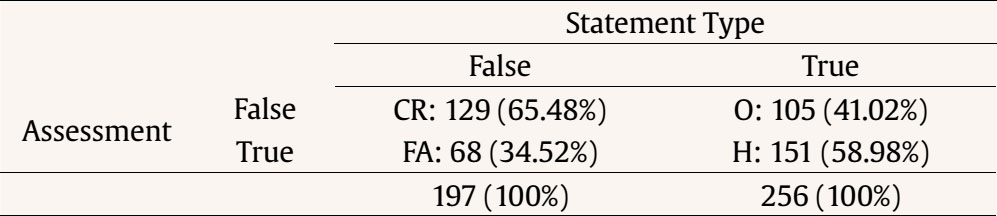

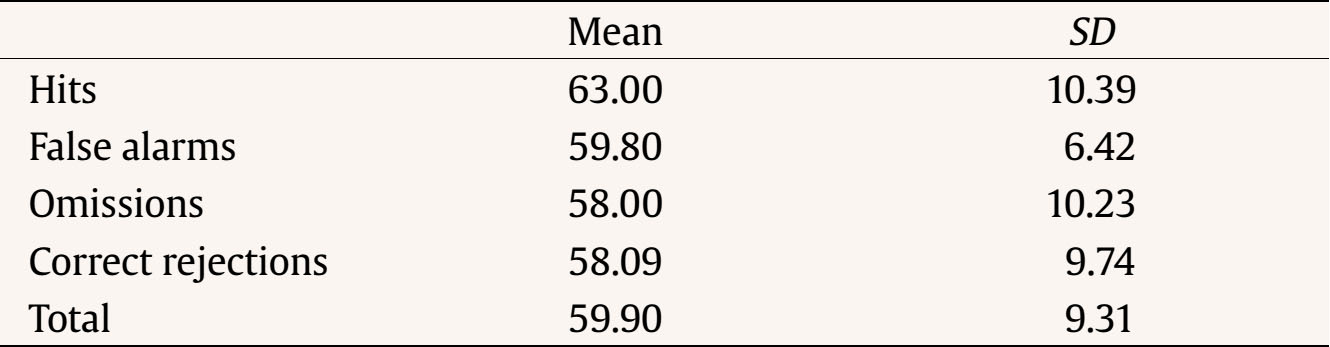

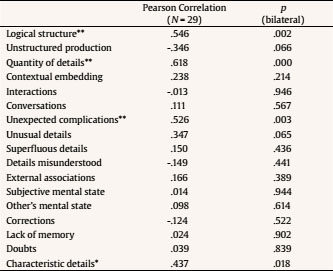

Introduction

People with intellectual disability (ID) are, for some crimes, victimised more than the general population, and they are involved in many police and legal proceedings as victims of such crimes (González, Cendra, & Manzanero, 2013). A large proportion of these proceedings never reach trial. One of the main reasons is probably the lack of adaptation of police and judicial procedures to the characteristics of these people (Bull, 2010; Milne & Bull, 1999, 2001). Another is the current myths that society holds about the limited ability of people with ID to testify accurately (Henry, Ridley, Perry, & Crane, 2011; Peled, Iarocci, & Connolly, 2004; Sobsey & Doe, 1991; Stobbs & Kebbell, 2003; Tharinger, Horton, & Millea, 1990; Valenti-Hein & Schwartz, 1993). In many cases, testimonies given by people with ID have been considered less credible (Peled et al., 2004). On the other hand, another myth holds that people with ID may be more believable (Bottoms, Nysse-Carris, Harris, & Tyda, 2003). Some studies (Manzanero, Contreras, Recio, Alemany, & Martorell, 2012) have shown that people with ID may perform approximately as well as others in forensic contexts. Moreover, their autobiographical memories may be quite stable over time, and their ability to describe an event may be independent of the degree of disability (Morales et al., 2017). Indeed, Henry et al. (2011) found no correlation between credibility assessments and either witness mental age or anxiety. For eyewitnesses with ID, a key problem may be the lack of studies on the characteristics that differentiate their true and false statements. For other populations (mainly children), forensic psychology has proposed useful procedures for assessing credibility by analyzing the content of statements.
One of these procedures is Statement Validity Assessment (SVA) (Köhnken, Manzanero, & Scott, 2015; Steller & Köhnken, 1989; Volbert & Steller, 2014). This technique assesses the credibility of statements given by minors who are alleged victims of sexual abuse. SVA is a comprehensive procedure for generating and testing hypotheses about the source and validity of a given statement. It includes methods for collecting data relevant to these hypotheses, techniques for analyzing those data, and guidelines for drawing conclusions about the hypotheses. Criteria-based content analysis (CBCA) is a method included in SVA for distinguishing truthful from fabricated statements. It is not applicable for identifying statements that are experienced as real memories but actually result from suggestive influences (Scott & Manzanero, 2015; Scott, Manzanero, Muñoz, & Köhnken, 2014), although it may be applied alongside other procedures (Blandón-Gitlin, López, Masip, & Fenn, 2017). Using the CBCA content criteria without a detailed analysis of the moderator variables produces rather low discrimination between true and false statements, and false-alarm rates of around 30% have been found (Oberlader et al., 2016). Previous research has shown that classification accuracy for true and false statements can sometimes be low even when evaluators are specifically trained in this technique, which could indicate that CBCA has basic problems (Akehurst, Bull, Vrij, & Köhnken, 2004).

CBCA takes into account 19 content criteria grouped into five categories (see Table 1): general characteristics, specific contents of the statement, peculiarities of content, motivation-related contents, and offence-specific elements.

Table 1
Content Criteria for Statement Credibility Assessment
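For readers who want the criteria in machine-readable form, the sketch below encodes the 19 CBCA criteria by category. It uses the labels commonly cited from Steller and Köhnken (1989). These labels are an assumption and may not match the wording of Table 1 exactly. The names `CBCA_CRITERIA` and `criterion_names` are hypothetical.

```python
from __future__ import annotations

# The 19 CBCA content criteria grouped into the five categories named in the text.
# Labels follow the commonly cited Steller & Köhnken (1989) list; the exact wording
# is an assumption and may differ from the article's Table 1.
CBCA_CRITERIA: dict[str, list[tuple[int, str]]] = {
    "General characteristics": [
        (1, "Logical structure"),
        (2, "Unstructured production"),
        (3, "Quantity of details"),
    ],
    "Specific contents": [
        (4, "Contextual embedding"),
        (5, "Descriptions of interactions"),
        (6, "Reproduction of conversation"),
        (7, "Unexpected complications during the incident"),
    ],
    "Peculiarities of content": [
        (8, "Unusual details"),
        (9, "Superfluous details"),
        (10, "Accurately reported details misunderstood"),
        (11, "Related external associations"),
        (12, "Accounts of subjective mental state"),
        (13, "Attribution of perpetrator's mental state"),
    ],
    "Motivation-related contents": [
        (14, "Spontaneous corrections"),
        (15, "Admitting lack of memory"),
        (16, "Raising doubts about one's own testimony"),
        (17, "Self-deprecation"),
        (18, "Pardoning the perpetrator"),
    ],
    "Offence-specific elements": [
        (19, "Details characteristic of the offence"),
    ],
}


def criterion_names() -> dict[int, str]:
    """Flatten the grouped table into a {criterion number: label} mapping."""
    return {num: name for group in CBCA_CRITERIA.values() for num, name in group}


if __name__ == "__main__":
    names = criterion_names()
    print(len(names), "criteria in", len(CBCA_CRITERIA), "categories")
```

A nested mapping keeps the category grouping of Table 1. The flattened view is convenient when criteria are referred to by number, as in the Method section.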
The basic assumption of CBCA is that statements based on memories of real events differ qualitatively from statements not based on experience (Undeutsch, 1982). According to Undeutsch’s original proposal, each content criterion is an indicator of truth. Its presence in a given statement is viewed as a sign that the statement is true, but its absence does not necessarily mean that the statement is false. This assumption has been shown to be incomplete. It considers neither false memories as a source of incorrect statements nor the effects of liars knowing about the criteria (Vrij, Akehurst, Soukara, & Bull, 2004a). Moreover, not all the criteria are always relevant for discrimination (Bekerian & Dennett, 1992; Manzanero, 2006, 2009; Manzanero, López, & Aróztegui, 2016; Porter & Yuille, 1996; Sporer & Sharman, 2006; Vrij, 2005; Vrij, Akehurst, Soukara, & Bull, 2004b), and their presence depends on a host of moderator variables (Hauch, Blandón-Gitlin, Masip, & Sporer, 2015; Oberlader et al., 2016). Among these variables are:

- preparation (Manzanero & Diges, 1995);
- time delay (Manzanero, 2006; McDougall & Bull, 2015);
- the individual’s age (Comblain, D’Argembeau, & Van der Linden, 2005; Roberts & Lamb, 2010);
- questioning and multiple retrievals (Strömwall, Bengtsson, Leander, & Granhag, 2004).

Also, fantasies, lies, dreams, and post-event information do not all share the same differentiating characteristics. Furthermore, changing a single detail of a real event, however important, is not the same as fabricating an entire event. An example is whether the person’s role in the event was that of witness or protagonist (Manzanero, 2009). Indeed, false statements are rarely entirely fabricated. Rather, they originate in part from actual experiences that are modified to create something new. In addition, the characteristics of statements vary depending on the person’s ability to generate a plausible statement.
This is relevant to people with ID. It has been proposed that lying is usually more cognitively complex than telling the truth (Vrij, Fisher, Mann, & Leal, 2006) and therefore demands more cognitive resources (Vrij & Heaven, 1999). The aims of the present study were:

(i) to use CBCA to analyze the statements given by true and simulating witnesses with intellectual disability;
(ii) to assess people’s intuitive ability to discriminate between the two types of statements;
(iii) to assess discrimination through big data analysis.

Method

Video-recorded accounts provided by 32 people with mild to moderate, non-specific intellectual disability were used as the material to be analyzed. Fifteen participants were true witnesses to a real event that had taken place two years earlier, when the bus in which they were travelling on a day trip caught fire. These participants had a mean IQ of 62.00 (SD = 10.07) and a mean age of 33.93 years (SD = 6.49). Seventeen other participants provided simulated accounts of the same event. They had a mean IQ of 58.41 (SD = 8.42) and a mean age of 31.75 years (SD = 7.07). IQ did not differ significantly between conditions, F(1, 30) = 1.204, p = .281, η² = .039. IQ scores were obtained with the Wechsler Adult Intelligence Scale (WAIS-IV; Wechsler, 2008). All 32 participants provided informed consent.

The statements were obtained with a procedure similar to that used in other studies (Vrij et al., 2004a, 2004b). All the participants who had not gone on the day trip already knew about the event. They knew the people involved, because all of them belonged to the same care centre for people with intellectual disabilities. The event was widely discussed when it took place and was even reported in the media.
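The IQ comparison between conditions reported in the Method section can be checked from the group summaries alone. The sketch below is a standard one-way between-groups ANOVA computed from each group’s n, mean, and SD, with η² taken as SS_between / SS_total. The function name is hypothetical. Because the published means and SDs are rounded, the result only approximately reproduces F(1, 30) = 1.204 and η² = .039.

```python
from math import fsum


def anova_from_summary(groups):
    """One-way between-groups ANOVA from (n, mean, sd) tuples.

    Returns (F, df_between, df_within, eta_squared). No p-value is computed,
    because that needs the F distribution (e.g. from SciPy).
    """
    n_total = sum(n for n, _, _ in groups)
    k = len(groups)
    grand_mean = fsum(n * m for n, m, _ in groups) / n_total
    # Between-groups sum of squares: size-weighted squared deviations of group means.
    ss_between = fsum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-groups sum of squares recovered from each group's sample SD.
    ss_within = fsum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_sq


# True witnesses (n = 15) vs. simulators (n = 17), IQ summaries from the Method section.
f_stat, df1, df2, eta_sq = anova_from_summary([(15, 62.00, 10.07), (17, 58.41, 8.42)])
print(f"F({df1}, {df2}) = {f_stat:.3f}, eta^2 = {eta_sq:.3f}")
```

With the rounded summaries, this gives F ≈ 1.21 and η² ≈ .039, in line with the reported values.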
Regardless of this prior knowledge, all participants in both conditions were given a verbal summary of the most important information about the day trip, such as its location, the main complication, and the course of the day. To increase the ecological validity of the study, all 32 participants were encouraged to give their testimonies as best they could. They were not pressured to make the interviewer believe their testimony, to avoid undue tension in the interview. However, they were told they would be treated to a soda if they succeeded in convincing the interviewer that they had, in fact, experienced the event. In the end, all of them received this treat. Two forensic psychologists from the Unit for Victims with Intellectual Disability, experts in interviewing and taking testimony, interviewed each of the 32 participants individually. All interviews were audiovisually recorded. The same instructions were given in every interview: “We want you to tell us, with as much detail as you can, from the beginning to the end, what happened when you went on the day trip and the bus caught fire. We want you to tell us even the things you think are not very important.” Once a free-recall statement had been obtained, all participants were asked the same questions: Who were you with? Where was it? Where did you go? What did you do? What happened afterwards? The interviewers were blind to the participants’ condition (true vs. false experience). Once the testimonies had been obtained, the videos were evaluated using two different procedures: (a) intuitive analysis carried out by people without knowledge of forensic psychology, and (b) technical analysis performed by forensic psychologists using the CBCA criteria. Of the 32 statements, two videos from the true condition and one from the false condition were excluded from the intuitive judgements.
They were excluded because communication problems prevented the evaluators from understanding what the participants said under the conditions in which the intuitive evaluation was carried out.

Intuitive Credibility Assessment

Thirty-three evaluators took part (6 men and 27 women; mean age = 23.54 years, SD = 4.04). They were recruited from among psychology students in Spain who volunteered to participate in the study. They received no compensation for participating and had no specific knowledge of credibility analysis techniques or of intellectual disability. The video recordings of sixteen true and thirteen false statements were shown on a large-format screen at the university. All evaluators attended the showing at the same time but were prevented from interacting, so that they did not bias each other’s individual assessments. The instructions were as follows: “Next, a series of videos will be shown in which people with intellectual disability are talking about an event related to a bus accident. Some of the statements were given by individuals who experienced that event; the others were given by individuals who, although they were not there, were told about the event, and they have given their statement with the intention of making us believe they were there. The task is to decide who is telling the truth and who is lying to us. As you are assessing each statement, bear in mind that the interviewees are all people with intellectual disability, so their way of telling things may be special.” The twenty-nine videos were shown in random order to prevent a learning effect from affecting the evaluation of true and false statements. After each video, the evaluators were asked to categorize the statement as true or false. In the first evaluations, it was observed that viewing all 29 videos caused saturation and fatigue in the evaluators.
To prevent fatigue from leading to random decisions, each evaluator was shown a maximum of 15 videos, while ensuring that every video was ultimately evaluated. In addition, the evaluators were instructed to tell the experimenters if they felt very tired. A total of 197 evaluations of the true condition and 256 evaluations of the false condition were collected.

Analysis of Phenomenological Characteristics of the Statements Using CBCA Criteria

The interview video recordings were transcribed to facilitate analysis of the phenomenological characteristics of the statements. Two trained CBCA evaluators each assessed the criteria in every statement independently and then reached an inter-judge consensus. Inter-coder reliability of the CBCA codings was assessed with an agreement index, AI = agreements / (agreements + disagreements). For all variables, this index exceeded the cut-off of .80 (Tversky, 1977), except for “logical structure” and “unstructured production”, where it was .67. Each criterion was assessed in terms of its presence or absence in the statement, as originally defined by Steller and Köhnken (1989). To measure the degree of presence of each criterion, the evaluators counted how many times the criterion appeared throughout the report. For the criterion “quantity of details”, the evaluators counted the micropropositions that described, as objectively as possible, what happened in the actual event. This is a better measure than counting words, because it is not influenced by the participants’ descriptive style. Criterion 13, “attribution of perpetrator’s mental state”, was modified to “attribution of other’s mental state”. Criterion 19, “details characteristic of the offence”, was modified to “details characteristic of the event”. Criteria 17 (“self-deprecation”) and 18 (“pardoning the perpetrator”) were not taken into consideration because of the nature of the event.
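The agreement index described above can be sketched as follows. The sketch assumes each coder’s output for one criterion is a list of codes, one per statement, compared position by position; the article does not state the unit of comparison. The function name and the example codings are hypothetical.

```python
def agreement_index(coder_a, coder_b):
    """AI = agreements / (agreements + disagreements) for two coders' paired codes."""
    if not coder_a or len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same, non-empty set of statements")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    disagreements = len(coder_a) - agreements
    return agreements / (agreements + disagreements)


# Hypothetical presence (1) / absence (0) codings of one criterion in three statements.
ai = agreement_index([1, 0, 1], [1, 1, 1])
print(f"AI = {ai:.2f}", "(meets .80 cut-off)" if ai >= 0.80 else "(below .80 cut-off)")
```

Two agreements out of three paired codes give .67, the value reported for two of the criteria. Note that this simple proportion does not correct for chance agreement, unlike indices such as Cohen’s kappa.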
Results

CBCA Characteristics of the Statements

An ANOVA was conducted to assess the effect of the type of statement on the number of times each CBCA criterion was present in each report. Because multiple comparisons were conducted, the significance level was Bonferroni-adjusted to .003 (.05 divided by the 17 criteria analysed ≈ .003). As Table 2 shows, only “quantity of details” differed significantly between true and false statements. The remaining 16 criteria (some of which rarely occurred) produced no significant differences.

Table 2
Means, Standard Deviations, and ANOVA Values for Each Dependent Variable
Note. *p < .003 (Bonferroni adjustment for multiple comparisons).

Big Data Analysis of Characteristic Features of Statements

Big data techniques aim at the exploration and analysis of complex data. High-Dimensional Visualization (HDV) graphs facilitate the visualization of complex data. This technique displays all the data at once, enabling researchers to explore them graphically in search of distribution patterns (for more information, see Manzanero, Alemany, Recio, Vallet, & Aróztegui, 2015; Manzanero, El-Astal, & Aróztegui, 2009; Vallet, Manzanero, Aróztegui, & García-Zurdo, 2017). The graphs are similar to scatter plots. The values of the variables for each case are represented as a point in a high-dimensional space. Here, each case is a statement and its values are its CBCA criterion scores (17 values or dimensions in this study). When there are more than three variables, as in this study, mathematical dimensionality reduction techniques are used to build a 3D graph (Buja et al., 2008; Cox & Cox, 2001). Each point in the hyperspace has a distance to each of the other points. Multidimensional scaling searches for 3D points that preserve the distances between points as much as possible (Barton & Valdés, 2008). Sammon’s error (Barton & Valdés, 2008) is used to quantify the error of the 3D transformation. The 3D points are represented using Virtual Reality Modelling Language (VRML).
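To make the dimensionality-reduction step concrete, here is a minimal NumPy sketch under stated assumptions. It uses classical (Torgerson) multidimensional scaling, which may not be the exact variant the authors used; the text does not say. It then scores the embedding with Sammon’s error, E = (1 / Σ d*ij) Σ (d*ij − dij)² / d*ij over all pairs i < j. Here d*ij are distances in the original 17-dimensional space and dij those in 3D. The function names and the random placeholder data are hypothetical. The VRML export and the dividing line of Figure 1 are not reproduced.

```python
import numpy as np


def pairwise_distances(points):
    """Euclidean distance matrix for an (n, p) array of points."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def classical_mds(points, n_components=3):
    """Classical (Torgerson) MDS: embed the points in n_components dimensions."""
    d = pairwise_distances(points)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering  # double-centred squared distances
    eigvals, eigvecs = np.linalg.eigh(gram)  # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_components]
    # Small negative eigenvalues (numerical or non-Euclidean noise) are clipped to zero.
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))


def sammon_error(high, low):
    """Sammon's stress between original (high) and embedded (low) pairwise distances."""
    iu = np.triu_indices(len(high), k=1)  # each pair i < j counted once
    d_star = pairwise_distances(high)[iu]
    d = pairwise_distances(low)[iu]
    keep = d_star > 0  # identical statements would otherwise divide by zero
    return np.sum((d_star[keep] - d[keep]) ** 2 / d_star[keep]) / np.sum(d_star[keep])


# Placeholder data: 32 statements x 17 criterion counts (random, NOT the study's data).
rng = np.random.default_rng(0)
counts = rng.poisson(2.0, size=(32, 17)).astype(float)
embedding = classical_mds(counts, n_components=3)
print(embedding.shape, f"Sammon's error = {sammon_error(counts, embedding):.3f}")
```

Classical MDS was chosen here because it has a closed-form solution via an eigendecomposition. An iterative Sammon mapping would instead minimise E directly and usually reach a lower error.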
VRML files allow the graph to be rotated and explored, which facilitates visual analysis of the data in search of distribution patterns. Figure 1 represents all criteria, regardless of whether their discriminating values were statistically significant. The quality of the dimensionality reduction through multidimensional scaling (Buja et al., 2008) was very good, with a small Sammon’s error of .03. The dotted line that graphically divides true statements from false statements yields a correct classification rate of 81.25%. Of the simulated statements, 29.42% were classified as true.

Figure 1
HDV Graph of Content Criteria in True (Light) and False (Dark) Statements, Including all CBCA Criteria.
Note. Sammon’s error = .030; correct classification = 81.25%.

One possible explanation for the failure of several CBCA criteria to discriminate is the variability among participants. As can be seen in Figure 1, the clouds of dots representing each type of statement are very dispersed and overlap.

Intuitive Credibility Assessment

The analysis considered the 197 evaluations of the true videos and the 256 evaluations of the false videos. Following Signal Detection Theory (Macmillan & Kaplan, 1985; Tanner & Swets, 1954), three sets of measures were computed:

- discrimination accuracy (hits, false alarms, omissions, and correct rejections);
- the discriminability index (d’);
- the response criterion (c).

The credibility assessments based on lay participants’ natural ability showed above-chance accuracy. The discriminability index (d’) was .626 (SD = .121), Zd = 5.159, p < .05. The response criterion (c) was .086 (SD = .061), Zc = 1.412, p = ns. The evaluators therefore had a neutral response criterion. A score of 0 indicates a neutral criterion, a score greater than 0 a conservative criterion, and a score less than 0 a liberal criterion.
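For reference, d’ and c can be obtained from a hit rate and a false-alarm rate under the equal-variance Gaussian model. The sketch assumes that a hit is a true statement judged true and a false alarm is a false statement judged true, which matches the sign convention for c given above. The reported d’ has an SD, which suggests it was averaged over evaluators. Plugging in the pooled rates from the text (58.98% hits; 100% − 65.48% = 34.52% false alarms) gives values close to the reported .626 and .086. The function name is hypothetical.

```python
from statistics import NormalDist


def sdt_indices(hit_rate, false_alarm_rate):
    """Return (d', c) from hit and false-alarm rates (equal-variance Gaussian model)."""
    if not (0 < hit_rate < 1 and 0 < false_alarm_rate < 1):
        # z-scores are infinite at 0 or 1; such rates need a correction first.
        raise ValueError("rates must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit, z_fa = z(hit_rate), z(false_alarm_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)  # > 0 conservative, < 0 liberal
    return d_prime, criterion


# Pooled rates from the text: true statements judged true (hits) = 58.98%;
# false statements judged true (false alarms) = 100% - 65.48% correct rejections.
d_prime, c = sdt_indices(0.5898, 1 - 0.6548)
print(f"d' = {d_prime:.3f}, c = {c:.3f}")
```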
Overall, 61.81% of statements were correctly classified (see Table 3): 65.48% of the false statements and 58.98% of the true ones were correctly assessed.

Table 3
Intuitive Responses for Each Type of Statement
Note. CR = correct rejection; O = omission; FA = false alarm; H = hit.

For each testimony, a probability of “truthfulness” was computed from the intuitive judges’ decisions. It was the number of times the testimony was considered true divided by the number of evaluations made of it. The mean probability of truthfulness assigned to the false testimonies was 36.37 (SD = 31.64), whereas that assigned to the true ones was 64.00 (SD = 23.93), F(1, 28) = 6.750, p < .05, η² = .200. The level of disability of the persons with ID could have been one of the cues on which the evaluators based their intuitive assessments. However, no significant effects were found when participants’ IQ was analysed according to how their statements had been classified, considering the four possible types of response (H, FA, O, and CR), F(3, 26) = 0.498, p = ns, η² = .056. As can be seen in Table 4, mean IQs were similar for all groups.

Table 4
IQ Means and Standard Deviations of the Subjects according to the Type of Response Issued by the Evaluators

Relationship between CBCA Criteria and Intuitive Credibility Assessment

Two-tailed Pearson correlations were computed between the degree of presence of each CBCA criterion in the testimonies and the probability of truthfulness. They suggest that the evaluators’ intuitive judgements may have been influenced by the following criteria:

- “structured production”, r(29) = .546, p < .01;
- “quantity of details”, r(29) = .618, p < .01;
- “unexpected complications”, r(29) = .526, p < .01;
- “characteristic details”, r(29) = .437, p < .05.

No significant correlations were found for the remaining 13 criteria (see Table 5). That is, the more often these four criteria were present, the more likely a statement was to be judged true.
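The probability of truthfulness and its correlation with a criterion count can be sketched as follows. This is an illustration under assumptions: the lay judgements for each testimony are stored as booleans, the data are invented, and no significance test is computed (that would need the t distribution, e.g. from SciPy). Multiply the probabilities by 100 to match the percentage scale used in the text. The function names are hypothetical.

```python
from math import sqrt


def probability_true(judgements):
    """Share of evaluations that judged one testimony 'true' (judgements are booleans)."""
    if not judgements:
        raise ValueError("testimony has no evaluations")
    return sum(judgements) / len(judgements)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: each inner list holds the lay judgements (True = "true")
# received by one testimony; details holds that testimony's "quantity of details" count.
judgements = [
    [True, True, False, True],
    [False, False, True, False],
    [True, True, True, False],
    [False, True, False, False],
]
details = [14, 5, 11, 6]
p_true = [probability_true(j) for j in judgements]
print(p_true, f"r = {pearson_r(details, p_true):.3f}")
```

The correlation is written out by hand rather than with `statistics.correlation`, which only exists from Python 3.10 onward.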
Table 5
Pearson Correlations between Content Criteria and Intuitive Assessments of “True”
Note. *p < .05 (two-tailed); **p < .01 (two-tailed).

Discussion

In line with many other studies (not involving truth tellers or liars with ID), the lay participants could not discriminate between false and true stories at a level that could be considered useful in a forensic context (Rassin, 1999). This is one of the reasons CBCA was developed. The CBCA technique did indeed discriminate at a better level. However, of the 19 criteria, only one (“quantity of details”) was found to be significant. This criterion, also termed “richness in detail”, is present in some lies. It has been identified as a potential source of bias that may lead to incorrect veracity judgements (Nahari & Vrij, 2015). In the present study, “quantity of details” was significant for people with ID. This is notable because, when truthfully narrating an event, people with ID tend to give fewer details than the general population (Dent, 1986; Kebbell & Wagstaff, 1997; Perlman, Ericson, Esses, & Isaacs, 1994). ID is a component of certain syndromes that involve deficits in language development and articulation. This might explain why several of the CBCA criteria were rarely present in the current study. In Down syndrome, for example, which is the most common genetic syndrome with an ID component, language disorders are among the effects. In spontaneous conversation, the speech of people with Down syndrome is less intelligible, and they have more difficulty with grammatical structuring (Rice, Warren, & Betz, 2005). In fact, their problems with sentence structuring are similar to those of individuals diagnosed with language development disorder (Laws & Bishop, 2003). Thus, if the criterion that helps to identify the true statements of people with ID is indeed “quantity of details”, what could happen if their true accounts were compared with true accounts from the general population?
Some people with ID have a reduced vocabulary and semantic and autobiographical memory deficits, which limit their ability to describe an event in detail. For them, there is a risk that their credibility will be misjudged, which could lead to revictimisation. At the same time, the lay evaluators discriminated between true and false statements only about 12 percentage points above chance (61.81% correct vs. the 50% expected by chance), so a procedure that achieves better accuracy is needed. If such natural-ability data were extrapolated to a law enforcement setting, for example, the testimonies of people with ID would be correctly assessed in only about 60 percent of cases, and many true accounts would not be believed. This percentage is not far from what the police (and others) usually achieve when judging the statements of people with typical development (Manzanero, Quintana, & Contreras, 2015). Intuitive assessments of the testimonies of people with ID in turn determine the credibility granted to them in forensic and legal settings. To explore the possible basis of these assessments, we related the probability of a “true” assessment to IQ and to the CBCA content criteria. As in the study by Henry et al. (2011), IQ did not account for the lay evaluators’ decisions. Among the CBCA criteria, only four (structured production, quantity of details, unexpected complications, and characteristic details) appear to be associated with intuitive truthfulness. The big data analysis, in contrast, achieved a better classification rate. Notably, and surprisingly, this result was obtained using all the CBCA variables, not only the one yielding significant differences. The significant variable had initially been expected to yield better classification than the rest.
Because that was not the case, the variables that did not show significant differences appear to hold useful information that the big data technique is able to exploit, which yields better classification quality. In the near future, this approach may help to find an improved way of distinguishing true from false statements.


Copyright © 2026. Colegio Oficial de la Psicología de Madrid
