Combining the Devil’s Advocate Approach and Verifiability Approach to Assess Veracity in Opinion Statements

[Combinando los enfoques de abogado del diablo y verificabilidad para evaluar la veracidad de declaraciones basadas en opiniones]

Sharon Leal¹, Aldert Vrij¹, Haneen Deeb¹, Oliwia Dabrowna¹, and Ronald P. Fisher²

¹University of Portsmouth, UK; ²Florida International University, USA

https://doi.org/10.5093/ejpalc2023a6

Received 14 November 2021, Accepted 22 July 2022

Abstract

Aim: We examined the ability to detect lying about opinions with the Devil’s Advocate Approach and Verifiability Approach. Method: Interviewees were first asked an opinion eliciting question to argue in favour of their alleged personal view. This was followed by a devil’s advocate question to argue against their alleged personal view. Since reasons that support rather than oppose an opinion are more readily available in people’s minds, we expected truth tellers’ responses to the opinion eliciting question to include more information and to sound more plausible, immediate, direct, and clear than their responses to the devil’s advocate question. In lie tellers these patterns were expected to be less pronounced. Interviewees were also asked to report sources that could be checked to verify their opinion. We expected truth tellers to report more verifiable sources than lie tellers. A total of 150 participants expressed their true or false opinions about a societal issue. Results: Supporting the hypothesis, the differences in plausibility, immediacy, directness, and clarity were more pronounced in truth tellers than in lie tellers (answers to eliciting opinion question sounded more plausible, immediate, direct, and clear than answers to the devil’s advocate question). Verifiable sources yielded no effect. Conclusions: The Devil’s Advocate Approach is a useful tool to detect lies about opinions.

Resumen

Objetivos: El artículo analiza la capacidad para detectar el engaño en declaraciones de testigos basadas en opiniones con el enfoque del “abogado del diablo” y el de la verificabilidad. Método: A un grupo de entrevistados se le pidió que argumentaran a favor de su opinión personal. A un segundo grupo se le requirió que, haciendo de abogado del diablo, argumentaran en contra de su punto de vista personal. Dado que los argumentos favorables a la opinión personal son mentalmente más accesibles que los contrarios, esperábamos que las respuestas honestas de los testigos incluyeran más argumentos y resultaran más plausibles, inmediatas, directas y claras que las respuestas haciendo de abogado del diablo. Por su parte, en la condición de respuestas falsas esperábamos que estos patrones fueran menos pronunciados. Además, se solicitó a los entrevistados que informaran de los medios en los que podría verificarse su opinión. Esperábamos que los testigos de la condición de verdad aportaran más fuentes verificables que los de la condición de mentira. Participaron en el estudio un total de 150 sujetos que manifestaron su opinión verdadera o falsa sobre un tema de relevancia social. Resultados: Los resultados confirmaron la hipótesis planteada: los testigos honestos prestaron declaraciones más plausibles, inmediatas, directas y claras que los falsos (las respuestas de los entrevistados que argumentaron a favor de su opinión personal resultaron más plausibles, inmediatas, directas, y claras que las respuestas haciendo de abogado del diablo). Sin embargo, no se observaron efectos del factor testigo en las fuentes de verificación. Conclusiones: El enfoque de abogado del diablo es una herramienta útil para la detección de opiniones falsas.

Keywords

Lying about opinions, Plausibility, Immediacy, Directness, Clarity

Palabras clave

Mentir sobre opiniones, Plausibilidad, Inmediatez, Franqueza, Claridad

Cite this article as: Leal, S., Vrij, A., Deeb, H., Dabrowna, O., & Fisher, R. P. (2023). Combining the Devil’s Advocate Approach and Verifiability Approach to Assess Veracity in Opinion Statements. The European Journal of Psychology Applied to Legal Context, 15(2), 53-61. https://doi.org/10.5093/ejpalc2023a6

Correspondence: aldert.vrij@port.ac.uk (A. Vrij).

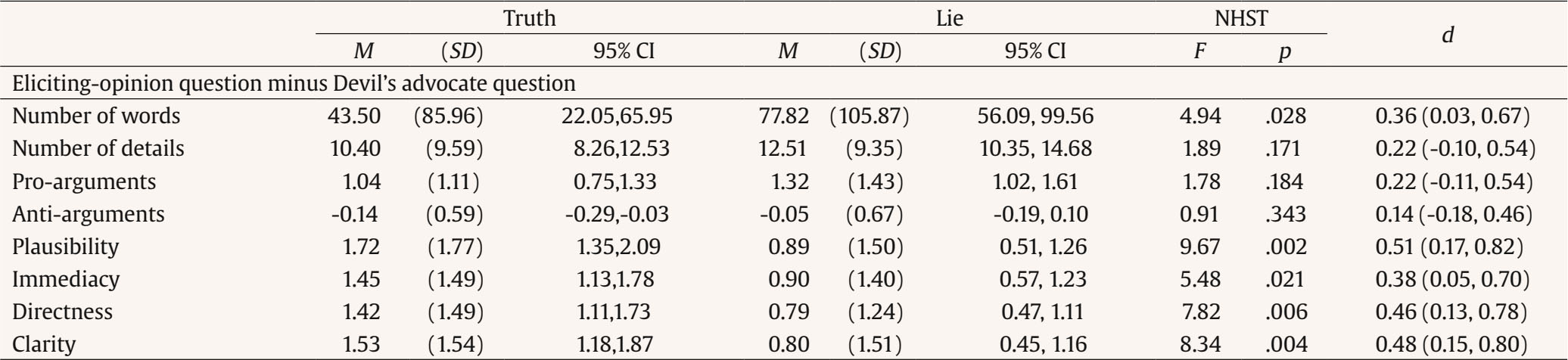

Nonverbal and verbal cues to deceit are typically weak and unreliable (DePaulo et al., 2003; Vrij & Fisher, 2023; Vrij et al., 2019). Researchers have therefore proposed designing interview protocols that enhance such cues or elicit new cues (Vrij & Granhag, 2012). To date, four widely examined interview protocols are available, and they all assess verbal cues to deceit (Vrij, Granhag, et al., 2022): assessment criteria indicative of deception (Bogaard et al., 2019; Colwell et al., 2015), cognitive credibility assessment (Vrij et al., 2017), strategic use of evidence (Granhag & Hartwig, 2015), which includes the variant tactical use of evidence (Dando & Bull, 2011), and the verifiability approach (Nahari, 2019). All these protocols have in common that they are used to detect deceit when people discuss their alleged activities. However, practitioners informed us that they are also interested in detecting lies about opinions, referring to various contexts such as border control interviews as well as source recruitment, source handling, and insider threat interviews. Examples of this would be: “Is the person really against terrorism?”; “Is the person really against violent protests?”; “Is the person really against corruption?”; “Is the person really in favour of the British government?”. Interview protocols to examine lying about opinions are scarce, with the devil’s advocate approach being a notable exception (Deeb et al., 2018; Leal et al., 2010; Mann et al., 2022). The devil’s advocate approach has been examined only twice with single interviewees (Leal et al., 2010; Mann et al., 2022) and needs replication. In addition, the verifiability approach may have potential to be used to detect lying about opinions. In the present experiment we combined the devil’s advocate approach and the verifiability approach.

The Devil’s Advocate Approach

The devil’s advocate lie detection approach consists of two questions (Leal et al., 2010).
First, after an interviewee gives his/her opinion (e.g., “I am in favour of Covid-19 vaccinations”) an “eliciting opinion question” is asked to investigate the reasons for having that opinion: “Please provide all the reasons you can think of that led you to having this opinion.” This request is then followed by a “devil’s advocate” question: “Try to play the devil’s advocate and imagine that you do not have this view at all. Provide all the reasons you can think of why you may not favour this view.” The answers to the two questions are compared with each other and different patterns of results are expected for truth tellers and lie tellers.

In a devil’s advocate interview truth tellers provide honest answers to the eliciting opinion question (the arguments that support their opinion) and honest answers to the devil’s advocate question (the arguments that could be used to speak against their opinion). Although truth tellers present arguments in the devil’s advocate answer that they do not believe in, they can still be considered truth tellers because they do not pretend to believe in these answers. Lie tellers try to fool interviewers: they have an opinion opposite to what they express. Therefore, in the eliciting opinion answer they provide arguments they do not actually believe in. When answering the devil’s advocate question they are invited to give arguments against their alleged opinion, which is an invitation to express their true opinion. Yet, they are still lie tellers because they have to pretend not to believe in the arguments they present when answering the devil’s advocate question.

Truth tellers should find it easier to answer the eliciting opinion question than the devil’s advocate question. Arguments that support someone’s attitude (eliciting opinion question) are typically more at the forefront of someone’s mind than reasons that oppose someone’s attitude (devil’s advocate question).
This is the result of confirmation bias, people’s tendency to seek information that confirms rather than disconfirms their views (Darley & Gross, 1983). The confirmation bias makes people more practiced in thinking about arguments that confirm their views, and such arguments are processed at a deeper level than arguments that disconfirm their views. Other explanations lead to the same outcome. For example, arguments that confirm people’s views are probably more compatible with their other related views and beliefs than arguments that disconfirm their views. It could thus be expected that truth tellers will generate more arguments and details and use more words when answering the eliciting opinion question than the devil’s advocate question. Processing arguments at a deeper level could result in answers to the eliciting opinion question sounding more plausible, immediate, direct, and clear than their answers to the devil’s advocate question.

Lie tellers express an attitude that is opposite to what they really think. For example, someone who thinks that violent protests are a necessary method to achieve an aim but who gives the impression in an interview of being “against” violent protests is lying about their actual opinion. For lie tellers, the devil’s advocate question (in this example, ‘Give me arguments in favour of violent protests’) is an invitation to provide their true opinion and the arguments should be readily available. Yet, we do not predict that lie tellers’ results will show the opposite pattern to truth tellers’ results, because lie tellers will be motivated to use at least two counter-interrogation strategies: preparation and consistency (Deeb et al., 2018). Lie tellers typically attempt to come across as being sincere during interviews (Leins et al., 2013), and one way to achieve this is by preparing themselves for interviews (Clemens et al., 2013). This preparation typically takes place by preparing answers to possible questions (Clemens et al., 2013).
It is therefore likely that lie tellers will think prior to the interview about arguments that support their pretended opinion. This should improve the quality of their eliciting opinion answers. Lie tellers typically think that inconsistency is considered a sign of deceit (Strömwall et al., 2004) and therefore strive to be consistent (Vrij et al., 2021). Consistency in the devil’s advocate interview protocol would mean providing answers of similar quality to the eliciting opinion and devil’s advocate questions. Therefore, after providing their prepared arguments in answer to the eliciting opinion question, lie tellers may attempt to produce a similar quality answer to the devil’s advocate question. This pattern of results (truth tellers report higher quality answers to the eliciting opinion question than to the devil’s advocate question whereas lie tellers’ answers to these two questions sound more similar) was indeed obtained by Leal et al. (2010) and Mann et al. (2022). Note that in the devil’s advocate lie detection approach ‘consistency’ indicates deception, opposite to what lie tellers typically think.

Verifiability Approach

The core assumption of the verifiability approach is that lie tellers find it more difficult and are less willing than truth tellers to provide details an investigator can check. Checkable details are activities (i) carried out with or (ii) witnessed by another named person; (iii) captured on CCTV cameras; or (iv) that leave behind traces (e.g., receipts, bank card transactions, phone calls; Vrij & Nahari, 2019). In terms of difficulty, an interviewee who committed a crime at location A but says in the interview that he was elsewhere at the time of the crime will find it impossible to provide details that conclusively demonstrate he was elsewhere at the time of the crime.
In terms of willingness, lie tellers may be reluctant to provide checkable details that are false (bluffing) out of fear that an investigator will notice this bluff when checking the provided false information. Meta-analyses showed that truth tellers indeed provide more verifiable details than lie tellers (Palena et al., 2021; Verschuere et al., 2021), particularly in criminal alibi settings (Verschuere et al., 2021). The same effect has also been found in other settings such as insurance settings (Harvey et al., 2017) and border control settings (Jupe et al., 2017). It has not been examined in opinion-statement settings before, but we expect it to also work in such a setting. People often share their opinions about societal issues with others either in face-to-face conversations or via social media (e.g., Facebook) and truth tellers should thus be able to provide such evidence.

In the present experiment we explicitly asked interviewees whether they could present sources that would back up their stories. One meta-analysis has shown that asking interviewees to present verifiable details strengthens the veracity effect because it leads only truth tellers to provide more verifiable information (Palena et al., 2021), but a second meta-analysis did not replicate this effect (Verschuere et al., 2021). Mann et al. (2022) also asked participants whether they could back up their stories with verifiable sources and found a significant effect: truth tellers provided more verifiable sources than lie tellers. Whereas in Mann et al. (2022) the verifiable information question was always the last question asked in the interview, we manipulated when to ask for verifiable information. We asked half of the participants this question before the two devil’s advocate approach questions and the other half of the participants after these two questions. We expected the location of the verifiability instruction to have an effect.
Asking the verifiable details question at the beginning of the interview may present a dilemma to lie tellers. They may bluff and pretend that they have shared their opinion with others. This may limit what they can say later in the interview when discussing their opinions out of fear that their lies will be exposed when the verifiable sources they mentioned are checked. Alternatively, lie tellers may decide not to provide verifiable sources. This will give lie tellers more freedom when discussing their opinion, but they may fear that the lack of verifiable sources provided sounds suspicious.

Hypotheses

The experiment was pre-registered (pre-registration: https://osf.io/qmn3f; registration: osf.io/8h2vq). The following four pre-registered hypotheses were tested:

- Veracity main effect: Truth tellers will provide fewer anti-arguments (arguments against their expressed opinion), more pro-arguments (arguments in favour of their expressed opinion), more total details, more verifiable sources, and more plausible, immediate, direct, and clear statements than lie tellers (Hypothesis 1).
- Order main effect: Participants in the devil’s advocate approach followed by the verifiability approach condition will provide more anti-arguments, more pro-arguments, more words, more total details, more verifiable sources, and more plausible, immediate, direct, and clear statements than participants in the verifiability approach followed by the devil’s advocate approach condition (Hypothesis 2).
- Veracity × order interaction effect: The predicted veracity effects are expected to be moderated by task order. Specifically, the effects are expected to be larger when the verifiability approach instruction is presented before the opinion eliciting prompt (Hypothesis 3).
- Devil’s advocate approach effect: Truth tellers will provide fewer anti-arguments, more pro-arguments, more words, more total details, and more plausible, immediate, direct, and clear statements in response to the opinion eliciting question than to the devil’s advocate question. These differences between questions will be less pronounced for lie tellers (Hypothesis 4).¹

Participants

According to a G*Power analysis, a minimum of 148 participants should take part for the experiment to have sufficient statistical power (.90) to detect a medium to large effect size (f² = .09) based on previous research (e.g., Leal et al., 2010; Nahari et al., 2014). We recruited 159 participants, of whom nine did not follow the instructions correctly. We deleted them from the sample. The final sample included 150 participants of whom 33 (20.8%) were male and 114 (71.7%) were female (three did not say). Their ages ranged from 18 to 68 and their mean age was M = 26.85 years (SD = 8.26). The participants described themselves as White British (n = 52, 32.7%), Asian (n = 25, 15.7%), White European (n = 19, 11.9%), White (n = 17, 10.7%), Black British (n = 9, 5.7%), African (n = 8, 5.0%), Arab (n = 8, 5.0%), mixed (n = 7, 4.4%), Hispanic (n = 2, 1.3%), or other (n = 1, 0.6%) (two did not say). Participants were recruited via the departmental database and university staff and student portals. They were awarded £10 for their participation and entered into a prize draw for £50, £100, and £150 vouchers.

The university’s faculty ethics committee granted approval. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee [Science and Health Faculty Ethics Committee University of Portsmouth, UK, SHFEC 20210043] and the experiment’s procedures were in alignment with the principles of the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.
Informed consent was obtained from all individual participants included in the study.

Procedure

The experiment took place online via Zoom. Participants completed via Qualtrics a 20-item opinion questionnaire asking their opinion about each topic on a 7-point rating scale from (1) totally disagree to (7) totally agree (see Appendix for the full list of statements). They were sent this questionnaire at least 24 hours in advance of the interview. Participants were randomly allocated to the two veracity conditions. For truth tellers the experimenter selected one topic with the strongest support or opposition and asked the participants to express their opinion about this topic truthfully during the interview. For lie tellers the experimenter again chose one topic with the strongest support or opposition and asked the participants to pretend that they had the opposite opinion during the interview.

For example, suppose the participant indicated that s/he strongly supports Covid-19 vaccinations. Truth tellers were then instructed to tell the truth during the interview about their views about Covid-19 vaccinations. Thus, they would inform the interviewer that they were strongly in favour of Covid-19 vaccinations before providing arguments why they support these in the eliciting opinion question. In the devil’s advocate (DA) question the truth teller would try to think of arguments against their true opinion of supporting Covid-19 vaccines. Lie tellers were instructed to pretend that they were against Covid-19 vaccinations. Thus, they would lie and inform the interviewer that they were strongly against Covid-19 vaccinations before providing arguments why they are against these in the eliciting opinion question. In the DA question the lie teller had to come up with arguments in favour of the vaccines, which of course in reality was his/her true opinion even though s/he pretended it was not. All participants gave at least one topic a score of 1 or 7 on the opinion questionnaire.
We tried to choose the discussed topics such that each topic was discussed an equal number of times by participants. This was not always possible, with topics 10 (n = 3) and 11 (n = 4) relatively infrequently chosen and topics 6 (n = 12) and 17 (n = 11) relatively frequently chosen (see Appendix for the list of topics). Lie tellers were matched with truth tellers on the topics. Matching was deemed necessary to rule out that possible veracity differences were caused by the topic discussed (arguments are perhaps easier to generate for one topic than for another). The 150 participants combined discussed all 20 topics listed in the Appendix.

Participants were informed about the importance of appearing honest during the interview. They were told that if they did so (if the interviewer believed their stories) they would be entered into a prize draw (vouchers worth £50, £100, and £150). If the interviewer did not believe them, they would have to write a statement about their opinion instead. In reality, all participants were entered into the draw and nobody had to write a statement. Participants were then given as much preparation time as they wished. During that time the experimenter informed the interviewer which opinion to discuss in the interview without telling the interviewer the veracity status of the participant (hence keeping the interviewer blind to the interviewees’ veracity status).

When the participants indicated they were ready to be interviewed, they completed a pre-interview questionnaire measuring demographics (age, gender, and ethnicity), motivation, preparation thoroughness, and preparation time. Motivation was measured with the question “To what extent are you motivated to perform well during the task?”. The answer could be given on a 5-point scale ranging from 1 (not at all motivated) to 5 (very motivated). Preparation thoroughness was measured with three items: 1 (shallow) to 7 (thorough); 1 (insufficient) to 7 (sufficient); and 1 (poor) to 7 (good).
The answers to the three questions were averaged (Cronbach’s alpha = .94). We also asked participants whether they thought they were given enough time to prepare themselves for the interview with the following question: ‘Do you think the amount of time you were given to prepare was: 1 (insufficient) to 7 (sufficient)?’. We included this question because participants could have felt pressurised not to take up too much preparation time in the experiment. After completing the pre-interview questionnaire, the interviewer joined the Zoom meeting and the experimenter left the call. The interviewer started the interview as follows: “I understood from the experimenter that you are strongly in favour of/opposed to (opinion)”. This was followed by three questions: Q1) “Please provide all the reasons you can think of that led you to having this opinion?” (eliciting opinion question); Q2) “Try to play the devil’s advocate and imagine that you do not have this view at all. Provide all the reasons you can think of why you may not favour this view?” (devil’s advocate question); and Q3) “Is there any information you can provide that I can check and that supports your statement that you are strongly in favour of/opposed to (opinion)? Verifiable information would be named people, social media activity, activities you carried out that can be tracked and so on?” We systematically varied the order of the two questions that make up the devil’s advocate approach and the verifiability approach question. In the DA first condition, the questions were asked in the following order: Q1 (eliciting opinion), Q2 (devil’s advocate), and Q3 (verifiability) (labelled DAA-VA). In the VA first condition, the order of questions was Q3 (verifiability), Q1 (eliciting opinion), and Q2 (devil’s advocate) (labelled VA-DAA). 
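The two question orders can be written down compactly. The following is a minimal sketch only: the dictionary and function names are hypothetical, and just the labels Q1 to Q3, DAA-VA, and VA-DAA are taken from the description above.

```python
from typing import Dict, List

# Question labels as used in the text:
# Q1 = eliciting opinion, Q2 = devil's advocate, Q3 = verifiability.
# The mapping below is an illustrative encoding, not the authors' materials.
QUESTION_ORDER: Dict[str, List[str]] = {
    "DAA-VA": ["Q1", "Q2", "Q3"],  # devil's advocate approach first
    "VA-DAA": ["Q3", "Q1", "Q2"],  # verifiability question first
}


def question_sequence(condition: str) -> List[str]:
    """Return the order in which the three questions are asked."""
    if condition not in QUESTION_ORDER:
        raise ValueError(f"Unknown order condition: {condition!r}")
    # Return a copy so callers cannot modify the shared mapping.
    return list(QUESTION_ORDER[condition])


if __name__ == "__main__":
    for condition in QUESTION_ORDER:
        print(condition, question_sequence(condition))
```

In both orders Q1 directly precedes Q2, so only the position of the verifiability question changes between conditions.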
After the interview, the experimenter sent the participants the Qualtrics post-interview questionnaire measuring rapport with the interviewer, percentage of truth telling, and likelihood of being entered into a prize draw or having to write a statement. Rapport is considered important for a productive interview (Brimbal et al., 2019). It was measured via the nine-item Interaction Questionnaire (Vallano & Schreiber Compo, 2011). Participants rated the interviewer on 7-point scales ranging from 1 (not at all) to 7 (extremely) on nine characteristics such as satisfied, friendly, active, and positive (Cronbach’s alpha = .82). Participants also reported the estimated percentage of truth telling during the interview (during all three questions combined) on an 11-point rating scale ranging from 0% to 100%. Participants finally rated whether they thought they had to write a statement using a 7-point Likert scale ranging from 1 (not at all) to 7 (very much). Participants then read the debrief form and were informed by the experimenter that they were believed by the interviewer. All participants were entered into the prize draw and received the £10 payment.

Coding

All interviews were audio recorded, transcribed, and coded. The ratings occurred per question. For the eliciting opinion and devil’s advocate questions we measured number of words, number of details, number of arguments in favour of their alleged opinion, number of arguments against their alleged opinion, and plausibility, immediacy, directness, and clarity. For the verifiable sources question we measured the number of sources reported. The number of words uttered by the participant in answering the eliciting opinion and devil’s advocate questions was counted via the Word software. Two independent coders rated the remaining variables. For details one coder coded all 150 transcripts and another coder coded 38 transcripts (25%). For the other variables each coder rated all 150 transcripts.
The coders, who were blind to the interviewees’ veracity status and the hypotheses, counted the “number of details” in the statements. A detail was defined as a unit of information that relates to the opinion. This implies that in the example below details such as ‘a year ago’ and ‘I changed my mind’ are not coded because they are not related to the actual opinion. The following statement contains 11 details:

Yeah so maybe a year ago I was following this whole thing. But then I changed my mind because I thought a little bit more about it. And I thought that by empowering one minority we are basically putting down another. So the whole thing of Black Lives Matter is to kind of show that Black Lives Matter stuff. But I think that the statement is false because obviously not only the black lives matter but all lives matter. And I understand might be- communication problem but I also noticed by recently kind of empowering black people black history and stuff we are kind of discouraging white people as I can say like that. So I just completely disagree with the statement. And obviously the story behind this is equality that everyone have equal rights and equal rights to live and for everything else so and I believe that by stating this we’re basically kind of disagreeing with ourselves and yeah.

The coders also counted the number of arguments. Examples are “You can’t control who you’re attracted to” (about having a homosexual friend); “I do believe in vaccines in general” (about Covid-19 vaccinations); and “Privatising the NHS would lead to other companies having too much control over our healthcare system” (about privatising the UK National Health Service). The arguments were divided into “pro-arguments” and “anti-arguments”. Pro-arguments are arguments given in favour of their opinion (most likely given in the eliciting opinion answer) and anti-arguments are arguments given that go against their opinion (most likely given in the devil’s advocate answer).
“Plausibility” was defined as “Does the answer sound reasonable and genuine and was there enough of an answer to sound convincing?”; “Immediacy” was defined as “Personal and not distanced”; “Directness” was defined as “To the point and not repetitive or waffle”; and “Clarity” was defined as “How clearly does the reader understand what the participant was saying by the end of the answer?”. These definitions were taken from DePaulo et al. (2003). The four items were measured on 7-point Likert scales ranging from 1 (not at all) to 7 (very much). The following statement was considered high in plausibility, immediacy, directness, and clarity (opinion about Covid vaccinations, opinion statement 14 in Appendix): Okay so there’s this research that I found which was from Johnson and Johnson which stated that six women developed blood clots in their brain up to three weeks post vaccine. And I believe that situations such as these are a lot more common than people think. The Covid vaccine is a fairly new vaccine like cause Covid I don’t feel like they’ve done enough research in the vaccine and like yes there are some people that it may have worked for but I’m trying to think of it long term and how it might affect us long term. And cause obviously Covid is a very new disease that just came out. And who’s to say in like five or 10 years time the people that have got the vaccine won’t be experiencing very harsh side effects like we don’t know how this vaccine will impact your bodies and your brain and how you functions or I just feel like in a way that getting these vaccines can be quite dangerous. And as well the people that have got it has reported to having quite a lot of side effects such as pain for feelings in your arm after you get the vaccine feeling tired headaches aches flu like symptoms muscle pains growing pains fevers and all of those kind of stuff. So like I just don’t know if it’s worth it cause of the side effects that you get. 
And even if you evaluate the whole Covid situation and the death rates and stuff like most people that get Covid anyway they don’t actually die from it at all like if you’re younger the death rates are like zero point something and you probably have more of a chance getting hit by a car than dying of Covid. And it’s just very low so if you’re young so I’m just thinking like maybe it’s not worth especially like if you don’t know the potential complications that you could get from getting Covid in the future. Most people that get Covid they get very mild symptoms and they can recover quite easily. So I just feel like if that’s the case and like what’s the point of getting it especially if you’re younger demographic and if you’re are mindful of your health I just don’t know if it’s worth taking a vaccine that I believe is not well researched on. Yeah. This statement was considered plausible (a score of 6 was given) because the person mentioned several reasons while elaborating on them. The statement was considered immediate (a score of 6 was given) because the statement was personal. The statement was considered direct (a score of 5 was given) because it was reasonably concise and not repetitive. The statement was considered clear (a score of 6 was given) because the statement was clearly understandable. The following statement (answer to the devil’s advocate question) was considered low in plausibility, immediacy, directness and clarity (opinion about inclusion policy at schools, opinion statement 10 in Appendix): Sometimes taking children out of mainstream schools and putting them in like a pupil referral unit can work for some those that are definitely for the works for the mainstream young people because it takes the distraction away. But I think keeping those young people in those schools is a good thing for those mainstream peoples as well to be able to kind of contend with distraction also. 
But yeah so sometimes it works kind of taking them out and putting them into a pupil referral unit but not all the time. Providing an alternative education I suppose would be a kind of favour for not being in mainstream but that that’s the only kind of thing I could kind of argue really.

The statement was considered implausible (a score of 1 was given) because the person contradicted him/herself (‘takes the distraction away’ and ‘contend with distraction’). The statement was considered low (a score of 3 was given) in terms of immediacy because it lacked personal involvement. The statement was considered somewhat low in directness (a score of 3 was given) because it was repetitive. The statement was considered unclear (a score of 2 was given) because the person appeared to struggle to formulate a devil’s advocate argument.

We measured inter-rater reliability between the two coders using the two-way random effects model measuring consistency. Reliability was very good for “details” (single measures ICC = .92) and sufficient for all other variables: “pro-arguments” (average measures ICC = .75), “anti-arguments” (average measures ICC = .61), “plausibility” (average measures ICC = .73), “immediacy” (average measures ICC = .65), “directness” (average measures ICC = .66), “clarity” (average measures ICC = .68), and “verifiable sources” (average measures ICC = .86). For “details”, we used the codings of the coder who coded all 150 transcripts. For the remaining variables we averaged the scores of the two coders and used these average scores in the analyses.

Our dependent variables differed slightly from those used by Leal et al. (2010). We measured number of words, details, and arguments, whereas Leal et al. (2010) only measured number of words. We considered the number of details and arguments to be more direct measurements of the confirmation bias than the number of words. Apart from plausibility and immediacy, Leal et al. (2010) measured being talkative, emotional involvement, and latency time (thinking time between question and answer), whereas we measured directness and clarity. We thought that being talkative was redundant because we already measured the number of words, details, and arguments. We considered emotional involvement and latency time unsuitable for the present experiment because these are nonverbal measurements, whereas our coding took place on the transcripts.

Motivation, Preparation Thoroughness, Preparation Time, Rapport, Percentage of Truth Telling, and Likelihood of Having to Write a Statement

A 2 (Veracity: truth vs. lie) x 2 (Order: DAA-VA vs. VA-DAA) between-subjects MANOVA was carried out with motivation, preparation thoroughness, preparation time, rapport, percentage of truth telling, and likelihood of having to write a statement as dependent variables. At a multivariate level, the Veracity effect was significant, F(6, 139) = 42.10, p < .001, ηp2 = .65, but the Order main effect, F(6, 139) = 0.88, p = .512, ηp2 = .04, and the Veracity x Order interaction effect, F(6, 139) = 1.55, p = .166, ηp2 = .06, were not significant. At a univariate level, only the Veracity effect for percentage of truth telling was significant, F(1, 144) = 250.34, p < .001, d = 2.59, 95% CI [2.12, 2.98]. Truth tellers reported a higher percentage of truth telling (M = 96.27, SD = 14.68) than lie tellers (M = 28.08, SD = 34.18).

The participants rated themselves as very motivated (M = 4.38, SD = 0.74 on a 5-point scale). They found their preparation somewhat thorough (M = 4.89, SD = 1.43 on a 7-point scale) and their preparation time sufficient (M = 6.09, SD = 1.11 on a 7-point scale). Participants also reported good rapport with the interviewer (M = 5.35, SD = 0.96 on a 7-point scale) and were somewhat uncertain whether they had to write a statement (M = 3.48, SD = 1.70 on a 7-point scale).
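As a rough check, the effect size reported above for percentage of truth telling can be approximated from the group means and standard deviations alone. The sketch below is not the analysis script used in the study: it assumes equal group sizes (the group sizes are not given in this section), so it uses the simple pooled standard deviation, and the function name `cohens_d` is introduced here only for illustration.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d with a pooled SD, ASSUMING equal group sizes."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Reported percentage of truth telling: truth tellers vs. lie tellers
d = cohens_d(96.27, 14.68, 28.08, 34.18)
print(round(d, 2))
```

Under the equal-groups assumption this yields a value close to the reported d = 2.59. With unequal groups, the pooled standard deviation would need to be weighted by the group sizes.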
Hypotheses 1, 2, and 3

Hypotheses 1 to 3 did not make a distinction between the eliciting opinion and devil’s advocate questions. For the analyses the responses to these two questions were merged. That is, for the frequency variables (number of words, details, pro-arguments, and anti-arguments) the responses for the two questions were combined (e.g., number of words in eliciting opinion question + number of words in devil’s advocate question). For the 7-point Likert scale variables (plausibility, immediacy, directness, and clarity) the scores to the two questions were averaged (e.g., [plausibility in eliciting opinion question + plausibility in devil’s advocate question]/2).

A 2 (Veracity: truth vs. lie) x 2 (Order: DAA-VA vs. VA-DAA) between-subjects MANOVA was carried out with (i) number of words, (ii) number of details, (iii) number of pro-arguments, (iv) number of anti-arguments, (v) verifiable sources, (vi) plausibility, (vii) immediacy, (viii) directness, and (ix) clarity as dependent variables. At a multivariate level, the main Veracity effect, F(9, 138) = 1.31, p = .235, ηp2 = .08, main Order effect, F(9, 138) = 1.80, p = .074, ηp2 = .11, and Veracity x Order interaction effect, F(9, 138) = 0.69, p = .721, ηp2 = .04, were all not significant. This means that we found no support for Hypotheses 1 to 3.

Hypothesis 4

Hypothesis 4 made a distinction between the eliciting opinion and devil’s advocate questions. To test this hypothesis the responses for the frequency and Likert scale variables from the devil’s advocate question were subtracted from the responses to the eliciting opinion question (e.g., number of words in eliciting opinion response minus number of words in the devil’s advocate response; plausibility score in eliciting opinion response minus plausibility score in devil’s advocate response).

A 2 (Veracity: truth vs. lie) x 2 (Order: DAA-VA vs. VA-DAA) between-subjects MANOVA was carried out with the eight variables listed in Table 1 as dependent variables. At a multivariate level, the main Veracity effect, F(8, 139) = 4.12, p < .001, ηp2 = .19, and main Order effect, F(8, 139) = 2.20, p = .031, ηp2 = .11, were both significant, whereas the Veracity x Order interaction effect, F(8, 139) = 1.21, p = .299, ηp2 = .07, was not significant.

Table 1
Inferential Statistics for the Dependent Variables Testing Hypothesis 4 as a Function of Veracity and the Devil’s Advocate Approach Questions

Note. NHST = null-hypothesis significance testing.

At a univariate level, none of the order effects were significant, all Fs < 3.08, all ps > .081. The univariate veracity results are presented in Table 1. The difference in number of words between the eliciting opinion and devil’s advocate question (more words uttered in the eliciting opinion answer) was more pronounced in lie tellers than in truth tellers. This contradicts Hypothesis 4. The differences in plausibility, immediacy, directness, and clarity were more pronounced in truth tellers than in lie tellers (answers to eliciting opinion question sounded more plausible, immediate, direct, and clear than answers to the devil’s advocate question). This latter set of findings supports Hypothesis 4.

We carried out two more MANOVAs. Rather than using the difference scores as dependent variables, we examined the answers to the eliciting opinion question in the first MANOVA and the answers to the devil’s advocate question in the second MANOVA. The 2 (Veracity: truth vs. lie) x 2 (Order: DAA-VA vs. VA-DAA) between-subjects MANOVA for the eliciting opinion question revealed a significant main effect for Veracity, F(8, 139) = 2.65, p = .010, ηp2 = .13. The main Order effect, F(8, 139) = 1.90, p = .065, ηp2 = .10, and Veracity x Order interaction effect, F(8, 139) = 1.01, p = .435, ηp2 = .06, were not significant.
Table 2 shows one significant univariate veracity effect: lie tellers reported more pro-arguments than truth tellers. The 2 (Veracity: truth vs. lie) x 2 (Order: DAA-VA vs. VA-DAA) between-subjects MANOVA for the devil’s advocate question revealed a significant main effect for Veracity, F(8, 139) = 2.55, p = .013, ηp2 = .13. The main Order effect, F(8, 139) = 1.94, p = .058, ηp2 = .10, and Veracity x Order interaction effect, F(8, 139) = 0.59, p = .782, ηp2 = .03, were not significant. Table 2 shows three significant univariate veracity effects: lie tellers’ answers sounded more plausible, more direct, and clearer than truth tellers’ answers.

Devil’s Advocate Approach

The devil’s advocate lie detection approach was examined in Hypothesis 4, where we examined the differences in answers to the eliciting opinion and devil’s advocate questions for truth tellers and lie tellers. These differences were more pronounced for truth tellers than for lie tellers (in terms of plausibility, immediacy, directness, and clarity), which supported Hypothesis 4. This also replicated the plausibility and immediacy findings of Leal et al. (2010) and the clarity findings of Mann et al. (2022), whilst directness was not measured in Leal et al. (2010) and Mann et al. (2022). Note that these results were obtained when comparing two responses from each participant. Practitioners prefer such within-subjects measures (Nahari et al., 2019; Vrij, Fisher, et al., 2022), because they control for individual differences in, for example, eloquence (Vrij, 2016). Table 2 showed that the effects were caused by the devil’s advocate question, as lie tellers’ answers sounded more thought through (plausible, direct, and clear) than truth tellers’ answers. Thus, lie tellers’ answers to the devil’s advocate question rather than their answers to the eliciting opinion question revealed deception.
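To make the scoring concrete, the merged scores used for Hypotheses 1 to 3 and the within-subjects difference scores used for Hypothesis 4 can be sketched as follows. This is only an illustration and not the scoring procedure from the study itself: the class and function names are hypothetical, only two of the variables are shown, and the example values are invented rather than taken from the data.

```python
from dataclasses import dataclass


@dataclass
class ParticipantScores:
    """Hypothetical scores for one participant on the two questions."""
    words_opinion: int            # words in the eliciting opinion answer
    words_devil: int              # words in the devil's advocate answer
    plausibility_opinion: float   # 7-point rating, eliciting opinion answer
    plausibility_devil: float     # 7-point rating, devil's advocate answer


def merged_scores(p):
    """Hypotheses 1 to 3: frequencies are summed, ratings are averaged."""
    return {
        "words": p.words_opinion + p.words_devil,
        "plausibility": (p.plausibility_opinion + p.plausibility_devil) / 2,
    }


def difference_scores(p):
    """Hypothesis 4: eliciting opinion answer minus devil's advocate answer."""
    return {
        "words": p.words_opinion - p.words_devil,
        "plausibility": p.plausibility_opinion - p.plausibility_devil,
    }


# Invented example values, for illustration only
example = ParticipantScores(180, 120, 6.0, 3.5)
print(merged_scores(example))
print(difference_scores(example))
```

A larger positive difference score means that the eliciting opinion answer exceeded the devil’s advocate answer on that variable, which is the pattern expected to be more pronounced in truth tellers.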
Table 2
Inferential Statistics for the Dependent Variables Testing Hypothesis 4 as a Function of Veracity and the Devil’s Advocate Approach Questions

Note. NHST = null-hypothesis significance testing.

Hypothesis 4 was not supported for the number of words, details, and arguments truth tellers and lie tellers provided. On the contrary, lie tellers provided more words and more pro-arguments than truth tellers to the eliciting opinion question, perhaps reflecting a desire to talk so that their answers do not appear too short. One could argue that these are more quantitative measures and that plausibility, immediacy, directness, and clarity better reflect the quality of the statement. Following this reasoning, the quality of the statements rather than the quantity of the statements revealed deception. This finding replicates that of Vrij, Deeb, et al. (2022), who also examined lying about opinions, albeit not through the devil’s advocate approach.

A possible explanation for this pattern of results (quality more than quantity reveals deception) is that lie tellers find it easier to add quantity than quality to their opinion statements. The same pattern of results does not occur when interviewees discuss their alleged activities, because in those settings the quantitative measure ‘total details’ indicates deception (Amado et al., 2016; Gancedo et al., 2021). Lie tellers may find it easier to make false claims about opinions than about activities. When discussing alleged activities, lie tellers are concerned that details give leads to investigators (Nahari et al., 2014), which is a reason to avoid providing details. Discussing opinions is less likely to result in leads to investigators, which should make lie tellers less reluctant to express them. This could be examined in future research.
Verifiability Approach

The verifiable sources variable did not discriminate between truth tellers and lie tellers, nor did the moment when the verifiable sources question was asked in the interview (before or after the two devil’s advocate approach questions) influence the results. Truth tellers reported on average M = 0.92 verifiable sources (SD = 1.08) and lie tellers M = 0.84 (SD = 0.99). The average score of truth tellers is low, which could explain the lack of a veracity effect (floor effect). We expected that truth tellers would discuss their opinions about societal issues with others (and thus include these individuals as verifiable sources in their account) more than they actually did. Alternatively, perhaps the question we asked was not sensitive enough to elicit verifiable sources from truth tellers. We just asked participants for a ‘named person’. If we had elaborated on the question, truth tellers might have thought of more discussions they had with others on the topic.

Methodological Considerations

Four methodological issues merit discussion. First, following Leal et al.’s (2010) design, the devil’s advocate question always followed the eliciting opinion question. This is the most logical order of asking these questions in an interview. In fact, asking the devil’s advocate question first could be met with confusion. For the same ‘avoiding confusion’ reason, the ACID interview protocol always starts with a free recall request in which interviewees report the event in chronological order before they are asked to recall the same event in reverse order (Colwell et al., 2015). Of course, the answer to the eliciting opinion question may affect the devil’s advocate answer. We do not consider this to be problematic, because the order currently used in research does reveal veracity differences.
Despite this, it could be worthwhile to examine a possible order effect by counterbalancing the order of the eliciting opinion and devil’s advocate questions in a future experiment.

Second, this experiment was carried out entirely online. Such experiments are valuable given the Covid-19 situation. We are not aware of experimental research examining the effect of online interviewing on volunteering information, nor do we have well-defined ideas about what that effect may be. In the present experiment we replicated the findings of Leal et al. (2010), who carried out a face-to-face experiment. Although comparing the results of two experiments is problematic, the replication suggests that similar results can be obtained with the devil’s advocate approach in face-to-face and online interviews.

Third, one may argue that in the devil’s advocate approach lie tellers are not lying much because they give real arguments about a topic. The lie involves presenting a pro-argument as an anti-argument and vice versa. The self-reported truthfulness score amongst lie tellers (they reported M = 28.08% of truth telling compared to truth tellers’ M = 96.27%) indicates that they felt they were lying considerably.

Fourth, unlike Leal et al. (2010), we did not ask observers to listen to the interviews to examine whether they could detect the truths and lies. Such a lie detection experiment would increase the ecological validity of the present findings.

Conflict of Interest

The authors of this article declare no conflict of interest.

Cite this article as: Leal, S., Vrij, A., Deeb, H., Dabrowna, O., & Fisher, R. P. (2023). Combining the devil’s advocate approach and verifiability approach to assess veracity in opinion statements. European Journal of Psychology Applied to Legal Context, 15(2), 53-61. https://doi.org/10.5093/ejpalc2023a6

Note

1 The variable Words was not included in Hypothesis 1 but was included in Hypothesis 4. This reflects the findings of Leal et al. (2010), who did not find a Veracity main effect.


Correspondence: aldert.vrij@port.ac.uk (A. Vrij).

Copyright © 2026. Colegio Oficial de la Psicología de Madrid
