Employment Interview Perceptions Scale

[Escala de evaluación de la percepción sobre las entrevistas de empleo]

Pamela Alonso and Silvia Moscoso

Universidad de Santiago de Compostela, España

https://doi.org/10.5093/jwop2018a22

Received 28 May 2018, Accepted 12 September 2018

Abstract

The objective of this research was to develop and validate the Employment Interview Perceptions Scale (EIPS). This scale evaluates two dimensions: perception of comfort during the interview and perception of the suitability of the interview for applicant evaluation. Two samples were used. The first one was composed of 803 participants, who evaluated their perceptions in an experimental context. The second sample consisted of 199 interviewees, who evaluated their perceptions in a real evaluation context. All participants evaluated their perceptions for two interview types (Structured Conventional Interview and Structured Behavioral Interview). The analyses confirmed the hypothesized factorial structure. The final version of the EIPS has 11 items: 6 of them make up the first factor and 5 make up the second factor. Regarding the reliability of the two factors, high values were reported in the two samples.

Resumen

El objetivo de esta investigación era desarrollar y validar la escala de evaluación de la percepción de la entrevista de empleo. Esta escala fue creada para evaluar dos dimensiones: la percepción del confort en la entrevista y la percepción de la idoneidad de la entrevista para la evaluación de los candidatos. Para la validación de la escala se han empleado dos muestras. La primera estaba compuesta por 803 participantes, quienes evaluaron su percepción en un contexto experimental. La otra estaba compuesta por 199 entrevistados, que evaluaron su percepción en un contexto real de evaluación. Todos los participantes evaluaron su percepción de dos tipos de entrevista: la entrevista convencional estructurada y la entrevista conductual estructurada. Los análisis confirmaron la estructura factorial inicial. La versión final de la escala incluye 11 ítems, 6 de ellos componen el primer factor y 5 el segundo. Con respecto a la fiabilidad de ambos factores, se encontraron valores altos en las dos muestras empleadas.

Palabras clave

Entrevista, Percepción de los candidatos, Entrevista convencional estructurada (ECO), Entrevista conductual estructurada (ECE).

Keywords

Interview, Applicants’ perceptions, Structured Conventional Interview (SCI), Structured Behavioral Interview (SBI).

Cite this article as: Alonso, P., & Moscoso, S. (2018). Employment interview perceptions scale. Journal of Work & Organizational Psychology, 34, 203-212.

https://doi.org/10.5093/jwop2018a22

Funding: The research reported in this manuscript was supported by Grant PSI2014-56615-P from the Spanish Ministry of Economics and Competitiveness and Grant 2016 GPC GI-1458 from the Consellería de Cultura, Educación e Ordenación Universitaria, Xunta de Galicia. Correspondence: pamela.alonso@usc.es (P. Alonso).

Introduction

The interest of industrial and organizational psychologists in applicant reactions has been increasing over the last three decades (Aguado, Rico, Rubio, & Fernández, 2016; Anderson, Salgado, & Hülsheger, 2010; Hausknecht, Day, & Thomas, 2004; Nikolaou, Bauer, & Truxillo, 2015; Rodríguez & López-Basterra, 2018; Truxillo, Bauer, & Garcia, 2017). Some evidence has shown that applicant reactions affect organizational results, which has led organizational research to pay attention to the applicants’ side as well (Osca, 2007; Rynes, Heneman, & Schwab, 1980; Schuler, 1993). In fact, personnel selection is now understood as a bidirectional process, in which candidates’ opinions also matter (De Wolff & van der Bosch, 1984; Hülsheger & Anderson, 2009). This has led to a significant increase in the number of studies on this issue, especially on the perceptions of different selection tools and of distributive and procedural justice in different countries and cultures (Anderson, Born, & Cunningham-Snell, 2001; Anderson et al., 2010; Bertolino & Steiner, 2007; Hausknecht et al., 2004; Moscoso & Salgado, 2004; Steiner & Gilliland, 1996).

Taking into account that the employment interview is the most used selection tool (Alonso, Moscoso, & Cuadrado, 2015), applicants’ perceptions about this instrument can have an important impact on their perceptions of the hiring process. This means that further research on this issue is necessary. The main aim of this research was the development and validation of a scale for learning more about applicants’ perceptions of the employment interview.

Applicants’ Perceptions

Hausknecht et al. (2004) consider that applicants’ perceptions are formed by the set of their opinions about diverse dimensions of organizational justice, their thoughts and feelings about assessment instruments, and about personnel selection in general. At the same time, applicants’ perceptions establish the basis for their subsequent psychological processes. If their perceptions are positive, this will have a favorable effect on applicants’ reactions. On the contrary, if their perceptions are negative, there is a possibility that the selection process will end up failing since, for example, applicants could react by rejecting the position or taking legal action against the organization. The possibility that candidates may react negatively to the selection process, and the possible consequences of such reactions, is what has caused the interest in this area to increase. In fact, Anderson (2004) and Hausknecht et al. (2004) have highlighted six reasons why applicants’ perceptions should be considered by companies:

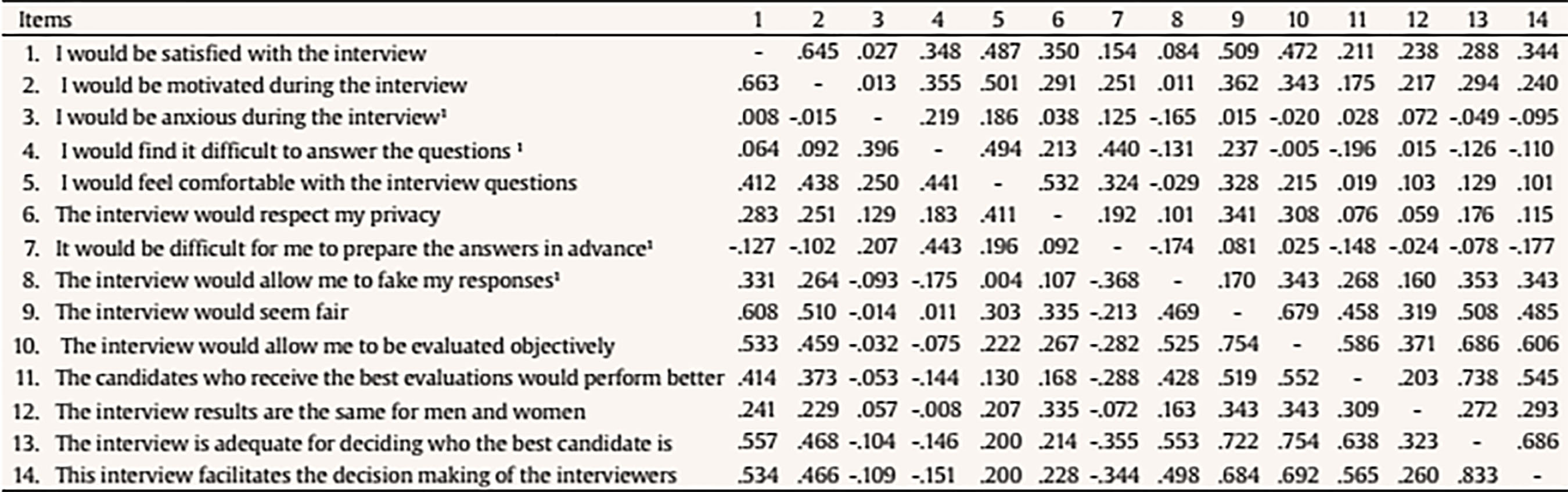

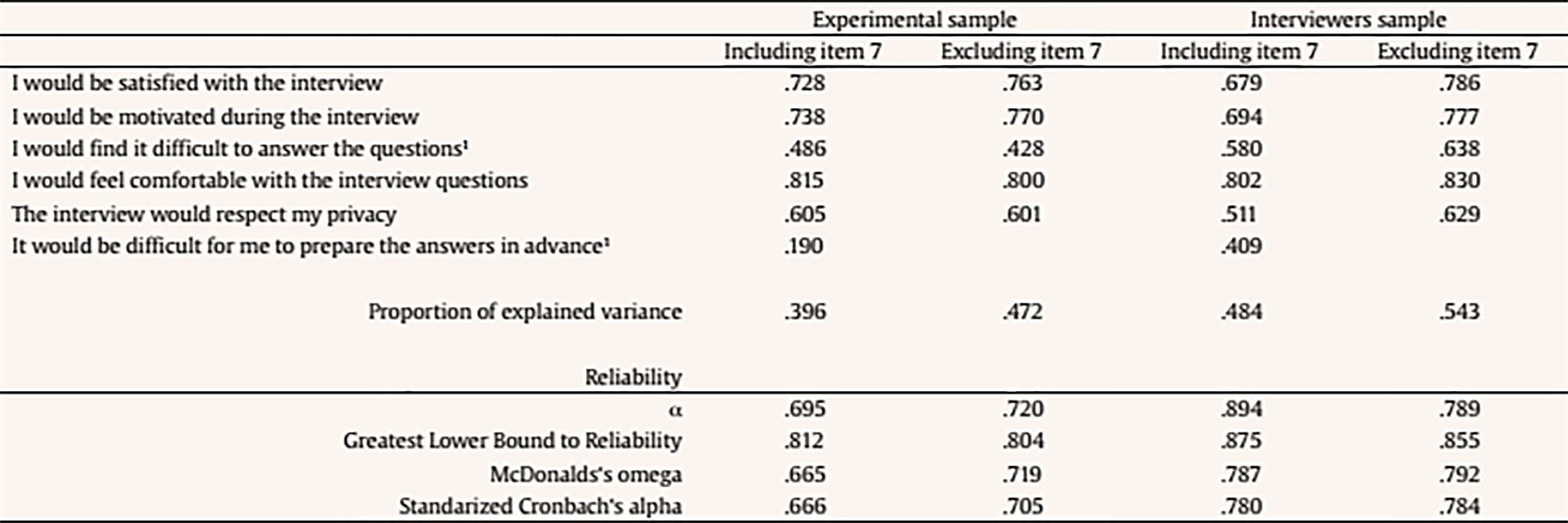

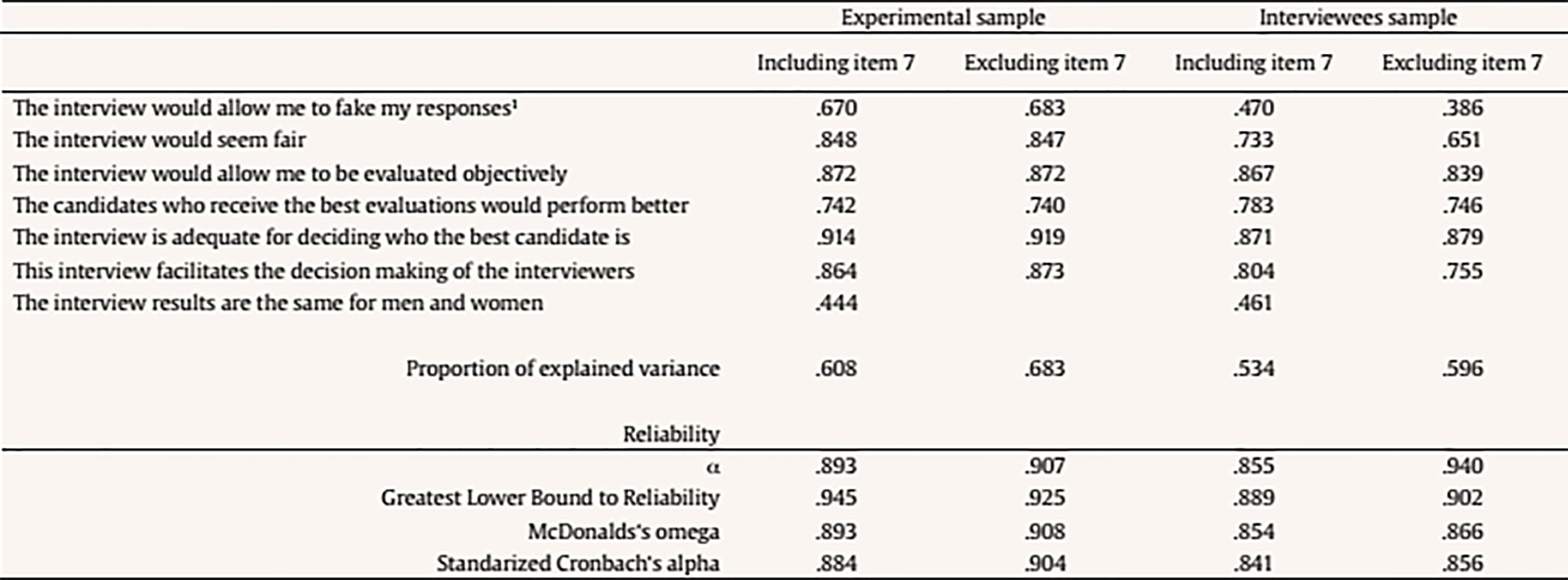

One of the most important contributions to the understanding of applicants’ reactions is Hausknecht et al.’s (2004) meta-analysis. These authors studied the relationship between perceptions of procedure characteristics and perceptions of justice and other outcomes, such as offer acceptance intentions, organizational attractiveness, and recommendation intentions. The results showed that perception of procedure characteristics correlates positively with perceptions of procedural justice, distributive justice, motivation during testing, attitudes toward tests, and attitudes toward the selection process in general. Specifically, face validity and perception of predictive validity correlated positively and moderately with the way procedure fairness was perceived and with the perception of distributive justice, although effect sizes, in this case, were lower. In addition, they found that these characteristics also had a significant impact on attitudes toward testing. Furthermore, moderate relationships between some perceptions of procedure characteristics and offer acceptance intentions and organizational attractiveness were found. Considering these meta-analytic results, we can assume that applicants’ perceptions of selection tools would have a considerable impact on their perceptions of the selection process and on their reactions.

Preferences Regarding Selection Instruments

Applicants’ reactions to selection tools have been the object of a series of studies carried out in many countries. Hausknecht et al. (2004) carried out a meta-analysis including primary studies published up to that date. The results showed that the interview was the best-evaluated method, followed by work sample tests, curricula, references, and cognitive abilities tests. Subsequently, Anderson et al. (2010) published a new meta-analytical review with a broader sample of primary studies from 17 countries.
In this research, in addition to analyzing job seekers’ general perception of the 10 best-known selection tools, they examined the generalization of results of applicants’ reactions. The results were very similar to those found by Hausknecht et al. (2004), with the selection interview being the second best-perceived tool after work sample tests. Additionally, they confirmed the generalization of applicants’ reactions.

The results shown in these two meta-analyses referred to the interview as a single tool, even though in practice there are different types of interviews. In fact, applicants could perceive the interview differently depending on its content or degree of structure. Therefore, it is crucial to examine whether there are differences in applicants’ perceptions of different types of employment interviews.

Applicants’ Perception of Different Types of Interviews

Three main interview types can be distinguished according to their content and degree of structure: (1) Unstructured Conventional Interview (UCI), in which the interviewer does not follow any script and formulates different questions to different interviewees depending on the course of their conversation (Dipboye, 1992, 1997; Goodale, 1982); (2) Structured Conventional Interview (SCI), in which the interviewer uses a script or a series of guidelines about the information that must be obtained from each interviewee; and (3) Behavioral Interview (BI), which is the interview type with the highest degree of structure, and includes questions based on applicants’ behaviors. This type of interview can be divided into two sub-types: (a) Structured Situational Interview (SSI), in which the interviewees are asked about how they would perform in a hypothetical situation (Latham, Saari, Pursell, & Campion, 1980), and (b) Structured Behavioral Interview (SBI), which is based on the evaluation of applicants’ past behaviors (Janz, 1982, 1989; Motowidlo et al., 1992; Salgado & Moscoso, 2002, 2014).
Despite the existence of all these alternatives for the interview process, meta-analytical results have only recommended the use of SCIs or BIs for hiring decisions. Specifically, these results have shown better psychometric results, in terms of reliability and criterion validity, for interviews with a higher degree of structure, that is, BIs (e.g., Huffcutt & Arthur, 1994; Huffcutt, Culbertson, & Weyhrauch, 2013, 2014; McDaniel, Whetzel, Schmidt, & Maurer, 1994; Salgado & Moscoso, 1995, 2006). Additionally, the conclusions of several primary studies about other important implications of the use of different types of interview (for example, their resistance to bias, the degree to which interviewers feel confident about their decisions, the probability of their producing adverse impact, their economic utility, etc.) also recommended the use of the most structured ones (Alonso, 2011; Alonso & Moscoso, 2017; Alonso, Moscoso, & Salgado, 2017; Rodríguez, 2016; Salgado, 2007).

However, although the literature about interview effectiveness for hiring decisions is extensive, there are few studies on applicant reactions to different types of interviews. Rynes, Barber, and Varma (2000) pointed out that new research was necessary on this, considering interview structure and interview content. The main results found in the research conducted on this subject are summarized below.

One of the first studies conducted on this topic was that of Latham and Finnegan (1993). These authors analyzed the perceptions of UCI, SCI, and SSI. They found that the applicants preferred UCI to SSI, because they felt that UCI allowed them to relax and say what they wanted, and that this interview gave them the possibility of influencing its course and especially of showing their motivation. Additionally, Janz and Mooney (1993) analyzed differences between perception of SCI and SBI.
The only significant differences found were that SBI was perceived as more complete and exhaustive and that participants considered that it had been prepared with a clearer knowledge of the type of qualities required for the position. However, a few years later, Conway and Peneno (1999) found that candidates had more favorable reactions to conventional questions than to situational or behavioral questions. In addition, some researchers studied applicants’ reactions to BIs specifically. Day and Carroll (2003) found that applicants perceived BI favorably. Furthermore, Salgado, Gorriti, and Moscoso (2007) analyzed justice reactions to SBI and found that it was perceived as a good tool for promotion decisions and better than other instruments for hiring processes in public administration.

In summary, the results are scarce, so it is not possible to reach a clear conclusion. More research is especially relevant considering that some results indicate that interviews with better psychometric properties could be the worst perceived by candidates (Conway & Peneno, 1999; Rynes et al., 2000). In addition, as pointed out by Levashina, Hartwell, Morgeson, and Campion (2014), employment interviews have a double objective, recruitment and selection, so it is of interest to clarify whether the most efficient interviews could be failing from the point of view of applicants’ attraction toward the organization.

Therefore, the main objective of this research was to design and validate a tool that would allow for the evaluation of applicants’ perceptions of employment interviews. Having this tool will allow us to continue advancing in the knowledge of applicants’ perceptions of different types of interviews.

Method

Samples

This study has been carried out with two independent samples.
The first one was composed of 803 university students of various subjects related to the field of human resources; 65.3% were women and the mean age was 24.66 years (SD = 6.52); 63.8% of the sample participated in the study before having received specific training on personnel assessment. Participants evaluated their perceptions of two types of employment interview after having completed an academic exercise in which they had to evaluate two applicants for a job. Therefore, each participant evaluated their perceptions using the same scale twice.

The second sample was composed of 199 students who were in the final year of their degree; 63.4% were engineering students, 28.6% labor relations students, and 8% students of a master’s degree in Psychology; 52.2% of the sample were women and the mean age was the same as in the previous sample. These participants evaluated their perceptions of two different types of interviews after being interviewed, that is, in a real context of evaluation. Therefore, they also used the same scale twice.

Measure

Employment Interview Perceptions Scale (EIPS). The first version of the scale was composed of 14 items, which were included to measure two factors: perception of interview comfort and perception of the interview’s suitability for applicants’ evaluation. Items were created taking as a reference other scales used in previous literature, such as those of Salgado et al. (2007) and Steiner and Gilliland (1996). The Spanish version of the scale was used for this validation study. Respondents had to indicate their degree of agreement with each of the items using a 5-point Likert scale, in which 1 implied totally disagreeing with the evaluated statement and 5 totally agreeing.
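Because the scale mixes favorably and unfavorably worded statements, the unfavorably worded ones are typically recoded before scoring, so that a higher value always means a more favorable perception. On a 1-to-5 scale the usual recoding is 6 minus the response. The following is a minimal sketch in Python; the item keys and function names are hypothetical labels chosen for illustration and do not come from the published scale.

```python
# Hypothetical short keys for the four negatively worded items
# (anxiety, difficulty answering, difficulty preparing, faking).
REVERSED_ITEMS = {"anxious", "hard_to_answer", "hard_to_prepare", "allows_faking"}


def reverse_score(response: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Mirror a Likert response around the midpoint of the scale."""
    if not scale_min <= response <= scale_max:
        raise ValueError(f"response {response} is outside {scale_min}-{scale_max}")
    return scale_min + scale_max - response


def score_responses(responses: dict[str, int]) -> dict[str, int]:
    """Return a copy of the responses with reverse-keyed items recoded."""
    return {
        item: reverse_score(value) if item in REVERSED_ITEMS else value
        for item, value in responses.items()
    }


if __name__ == "__main__":
    raw = {"anxious": 2, "fair": 4}  # made-up answers for illustration
    print(score_responses(raw))
```

Keeping the recoding in one function makes it easy to apply the same rule to both administrations of the scale (one per interview type).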
For a correct interpretation of results, scores were reversed in the following items: “I would be anxious during the interview”, “I would find it difficult to answer the questions”, “It would be difficult for me to prepare the answers in advance”, and “The interview would allow me to fake my responses”. Thus, a higher score on an item indicates a more favorable perception about the interview.

Experiment Preparation

Video recordings. For the study carried out with the first sample, the same recordings as in Alonso and Moscoso (2017) were used. That is, the videos showed the interviews of two applicants: one qualified and the other unqualified for the position of human resources technician. Each candidate was interviewed twice, once with an SCI and once with an SBI. In addition, the roles of both applicants were played by an actor and an actress, which meant that 8 videos were recorded (2 qualification levels × 2 interview types × 2 performers). The questions asked by the interviewers in both interviews aimed to evaluate the candidates in the dimensions of organization and planning, teamwork, problem-solving, and global assessment. However, the type of questions asked differed according to the nature of each interview.

Interview scripts. Two interview scripts were used with the second sample, one for an SCI and another for an SBI. For each interview, the interviewers had the same script used in the videos of the first sample. Therefore, the questions that were included in both interviews also aimed to evaluate the interviewees on the dimensions of organization and planning, teamwork, problem-solving, and global assessment.

Procedure

With the first sample, participants’ perception of the interview was evaluated in an experimental context. As part of an academic exercise, the students had to evaluate two applicants for the position of human resources technician. The appraisal had to be made after watching the video of an applicant’s interview.
These raters had seen at least one SCI and one SBI, and the order in which they watched the interviews was alternated between the different groups of participants. The perceptions assessment was made after each video had finished and the raters had evaluated the interviewee. For this, the participants were instructed to put themselves in an applicant’s position, so they had to think that they were also part of the same selection process, and that they would be evaluated with the same interview as in the video, which meant that the interviewer would ask them the same questions. These instructions were complemented with the indications that appeared printed in the questionnaire: “Suppose that you have presented yourself as an applicant for this selection process and you have been evaluated with the same interview you have just watched. Please, indicate your degree of agreement with the following statements.”

In the case of the second sample, the interviewees’ perceptions of the two types of interviews were evaluated in a real evaluation context, that is, when they had just been interviewed. The students were participating in a Development Assessment Center (DAC) consisting of various typical tests of a personnel selection process. The purpose for which the DAC was carried out was to facilitate the future incorporation of students into the labor market. The DAC allowed them to gain experience with different selection tools, to know how their candidatures could be perceived in a real evaluation process, and to help them, with the advice of some experts, to improve their results in future selection processes. All the participants were interviewed twice. Therefore, a total of 398 interviews were carried out, of which 199 were SCIs and 199 SBIs. The team of interviewers consisted of six researchers, specialized in personnel selection and experienced in conducting interviews. Each participant was interviewed by two different interviewers.
At the end of each interview, the interviewers gave the Employment Interview Perceptions Scale to the participant, who had to fill it out at that time. Apart from the interviewers’ instructions, which were the same as those given to the participants of the other sample, the questionnaire included the following indications: “Suppose that you are an applicant in a selection process, and the only test with which you will be evaluated is an interview like the one you have just done. Please, indicate your degree of agreement with the following statements.”

Results

A principal components analysis with Promax oblique rotation was carried out to verify the factorial structure of the Employment Interview Perceptions Scale (EIPS). Given that the main objective of this research was factorial analysis, all the analyses were carried out considering the two evaluations made by each participant (one for the SBI and one for the SCI) as independent evaluations. The FACTOR program (Lorenzo-Seva & Ferrando, 2018) was used for all the analyses. Following the advice of this software, polychoric correlations were used since some items showed asymmetric distributions and an excess of kurtosis.

Table 1 shows the correlation coefficients between the fourteen items that composed the first version of the scale. Correlations for the experimental sample are presented below the diagonal, and correlations for the interviewees are presented above the diagonal. Correlations between most of the items are very similar in the two samples.

Table 1
Correlation Matrix between the Items of the Employment Interview Perceptions Scale

Note. Correlations for the experimental context sample (n = 1,595) are presented below the diagonal, and correlations for the sample of the interviewees (n = 396) are presented above the diagonal. ¹The item has been reversed.

Looking for the best possible factorial solution, several analyses were carried out considering the elimination of some items.
In the first analysis all the items were included, and some of the items were removed in the other analyses. The results found in these analyses are shown in Table 2. The factor analyses carried out confirm the existence of the two theoretical factors on which the design of the scale had been based.

To verify that the factorial structure was repeated in the two samples, the resulting factorial structures were compared using Burt’s (1948) and Tucker’s (1951) factor congruence coefficient. Congruence coefficients (CC) between pairs of parallel factors corresponding to the sample formed by the participants who made their evaluation in an experimental context (sample a) and the sample of those who had just been interviewed (sample b) were the following: CC F1a-F1b = .95 for one of the factors, and CC F2a-F2b = .96 for the other. According to Cattell’s criterion (1978), coefficients of congruence equal to or greater than .93 are considered significant. Therefore, we can confirm that the factorial structure was replicated in the two samples. Additionally, the reliability coefficients reported for factor 1 and factor 2 in the experimental sample were .842 and .946, respectively, and .854 and .905 in the other sample.

Table 2
Factor Loading for Principal Components Analysis with Promax Rotation of the Employment Interview Perceptions Scale in the Two Samples, Reliability Coefficients, Inter-factor Correlations, and Burt & Tucker Congruence Coefficients (version without item 3)

Note. Factor loadings > .400 are in boldface. α = internal consistency coefficient; CCBT F1a-F1b = congruency coefficient between the first factor in the two samples; CCBT F1a-F2b = congruency coefficient between the first factor in the first sample and the second factor in the second sample; CCBT F2a-F1b = congruency coefficient between the second factor in the first sample and the first factor in the second sample; CCBT F2a-F2b = congruency coefficient between the second factor in the two samples. ¹The item has been reversed.

Confirmatory factorial analyses were carried out separately for each factor to confirm an optimal scale. The factor of perception of interview comfort was initially composed of 7 items: (1) “I would be satisfied with the interview”, (2) “I would be motivated during the interview”, (3) “I would be anxious during the interview”, (4) “I would find it difficult to answer the questions”, (5) “I would feel comfortable with the interview questions”, (6) “The interview would respect my privacy”, and (7) “It would be difficult for me to prepare the answers in advance”, but the first factorial analysis suggested discarding item 3.

Additionally, regarding the factorial loadings that Table 2 reported for the items “I would be satisfied with the interview” and “I would be motivated during the interview”, we believe that these items were interpreted in a different way depending on the situation. In fact, only in the real evaluation situation do the items load higher on this factor than on the other. In the experimental sample, however, where the participants had previously acted as raters, they interpreted these items according to the suitability of the interview. This is totally understandable, since the situation in which they made their evaluations meant that their perceptions of their satisfaction with the interview and their motivation during the interview were conditioned by how they perceived its suitability.
Given this result, we consider it appropriate to modify the wording of these two items in the following way: “I would be satisfied with my results in the interview” and “I would be motivated during the interview to get the best possible results”.

Finally, the results of the confirmatory factorial analyses carried out only with the items that composed this factor, including and excluding the item “It would be difficult for me to prepare the answers in advance”, are reported in Table 3. This table shows factorial loadings, proportions of explained variance, and several reliability coefficients (Cronbach’s alpha, greatest lower bound, McDonald’s omega, and standardized Cronbach’s alpha). Even considering the problems that the first two items presented in the experimental sample, the table shows favorable results from the point of view of the factorial loadings and the reliability. However, a scale excluding item 7 was confirmed to be more efficient, since the proportions of the explained variance and the reliability coefficients are higher using fewer items.

Table 3
Factor Loadings in the Confirmatory Factor Analysis for the Factor of Perception of Interview Comfort, the Proportion of the Explained Variance, and Reliability Coefficients, in the Two Samples, Including and Excluding Item 7

Note. α = internal consistency coefficient. ¹The item has been reversed.

Concerning the factor of perception of the suitability of the interview for candidate evaluation, this was initially composed of 7 items: (1) “The interview would allow me to fake my responses”, (2) “The interview would seem fair”, (3) “The interview would allow me to be evaluated objectively”, (4) “The candidates who receive the best evaluations would perform better”, (5) “The interview is adequate for deciding who the best candidate is”, (6) “The interview facilitates the decision making of the interviewers”, and (7) “The interview results are the same for men and women”.
However, Table 2 results suggested that this last item could be deleted from the scale. The results of the confirmatory factorial analyses carried out only with the items that composed this factor, including and excluding item 7, are reported in Table 4. The data recommended the use of the scale excluding this item, since the proportion of the explained variance and the different reliability coefficients reported were higher.

Table 4
Factor Loadings in the Confirmatory Factor Analysis for the Factor of Perception of the Suitability of the Interview, the Proportion of the Explained Variance, and Reliability Coefficients, in the Two Samples, Including and Excluding Item 7

Note. α = internal consistency coefficient. ¹The item has been reversed.

In conclusion, the final version of the EIPS is composed of eleven items. The perception of interview comfort factor includes five items and the perception of the suitability of the interview for applicant evaluation factor includes six. The Spanish and English versions of the final scale are reported in Appendixes A and B, respectively.

Discussion

The main objective of this research was to develop and validate a scale that would allow for the evaluation of applicants’ perceptions of different types of employment interviews. Specifically, in addition to designing the content, it was intended to verify the factorial structure and psychometric properties of the scale. The results confirm that the scale evaluates the two factors on which its design was based, that is, perception of interview comfort and perception of the suitability of the interview. In addition, favorable results in terms of reliability were reported for both factors.

A tool like the EIPS was necessary to study whether there are differences between applicants’ perceptions of the different types of interviews that can be used for personnel selection.
Although studies carried out so far on candidates’ perceptions have shown very favorable results for this instrument, most of them have evaluated perceptions of the interview as a single instrument, when in practice there are different types of interviews with substantial differences among them. So, this is a field that needs to be studied more deeply.

However, it should be remembered that the scale proposed in this research focuses only on the evaluation of applicants’ perceptions related to the interview’s content and structure. The perceptions that applicants may have about the interview depend on several issues related not only to these two characteristics, but also to other variables, such as information provided to an interviewee before the interview, the impression that a candidate has of the interviewer, the interviewer’s warmth during the interview, feedback received, expectations based on the influence of peer communication, etc. (Geenen, Proost, Schreurs, van Dam, & von Grumbkow, 2013; Harris & Fink, 1987; Nikolaou & Georgiou, 2018; Rynes, 1991; Rynes et al., 2000). Although these are issues that also have a relevant role in applicants’ reactions (Hausknecht et al., 2004), they do not depend exclusively on the type of interview used, but will rather vary depending on the organization in which personnel selection is carried out or the identity of the people in charge. Therefore, the evaluation of these aspects was not part of the objectives with which the EIPS was designed.

Implications for Practice and Future Research

The EIPS will allow for the evaluation of the perceptions that applicants have of interviews, so that we can continue advancing in the scientific knowledge on this issue. However, the present research only validates the Spanish version of the EIPS. New studies using the English version are needed to confirm the same results.
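One way such a replication could check that the factor structure carries over is the congruence coefficient used in the Results section to compare the two samples. The sketch below is a minimal Python illustration, not the routine of the FACTOR program: the function name and the loading values are hypothetical, and only the formula is standard (the sum of cross-products of two loading vectors divided by the square root of the product of their sums of squares).

```python
import math
from typing import Sequence


def congruence_coefficient(x: Sequence[float], y: Sequence[float]) -> float:
    """Congruence between two vectors of factor loadings for the same items."""
    if len(x) != len(y):
        raise ValueError("both factors must have loadings for the same items")
    cross_products = sum(a * b for a, b in zip(x, y))
    denominator = math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))
    if denominator == 0:
        raise ValueError("a factor with all-zero loadings has no defined congruence")
    return cross_products / denominator


if __name__ == "__main__":
    # Made-up loadings for one factor in two samples (illustration only,
    # not values from Table 2).
    sample_a = [0.71, 0.65, 0.80, 0.58, 0.62]
    sample_b = [0.68, 0.70, 0.77, 0.49, 0.66]
    print(round(congruence_coefficient(sample_a, sample_b), 3))
```

Under the criterion the article attributes to Cattell (1978), a value of .93 or higher between parallel factors would be read as the structure having been replicated.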
Additionally, carrying out primary studies using this scale, in any version, will allow us to know how different interviews are perceived and to compare the results between different interview formats. Also, these primary results could be integrated into future meta-analyses that would allow us to gain a more precise knowledge of this question.

In addition, advances in the knowledge of applicant interview perceptions will contribute to the improvement of professional practice. If interviewers know in advance the possible applicant reactions to the interview they will conduct, they can act accordingly. One example could be explaining to the interviewee, during the opening phase of the interview, the reason for the type of questions that will be asked in order to carry out the evaluation, which could improve the applicant’s perception of the interview’s suitability. Another example could be trying to reduce the interviewee’s anxiety in those kinds of interviews that could be perceived as more difficult.

Another contribution of this scale is the fact that it can be adapted to evaluate candidates’ perceptions of other selection tools. This would allow us to study specifically how comfort during other types of tests and their suitability for applicants’ assessment are perceived. In any case, it would be necessary to validate this adaptation of the scale, with the objective of confirming that it meets the psychometric criteria necessary to be used for research purposes.

In conclusion, this scale can contribute to a better understanding of applicants’ perceptions of the most important tool in personnel selection. We hope that the results of future research carried out using the EIPS will promote an improvement in personnel selection practice, in the interests of both practitioners and applicants.
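Checking that an adapted or translated version of the scale meets the reliability criteria mentioned above would usually include an internal-consistency estimate such as Cronbach’s alpha, one of the coefficients reported in Tables 3 and 4. The following is a minimal sketch in Python using the textbook formula on raw item scores. The article’s analyses were run in the FACTOR program with polychoric correlations, so this sketch need not reproduce the reported values; the function name and data layout are assumptions made for illustration.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("every item needs scores from the same 2+ respondents")
    # Total score per respondent, summing across items.
    totals = [sum(scores) for scores in zip(*items)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    sum_item_variances = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


if __name__ == "__main__":
    # Made-up scores for three items and five respondents (illustration only).
    scores = [
        [4, 5, 3, 2, 4],
        [4, 4, 3, 2, 5],
        [5, 5, 2, 3, 4],
    ]
    print(round(cronbach_alpha(scores), 3))
```

Reverse-keyed items should be recoded before computing the coefficient, otherwise negatively worded items lower the estimate artificially.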


Copyright © 2026. Colegio Oficial de la Psicología de Madrid
